Learn CUTLASS the hard way!

Walkthrough of optimization techniques for GEMMs from a naive fp32 kernel to CUTLASS bf16 kernel

I have been curious about learning the details of GEMMs beyond the basics, i.e., the typical shared memory cache kernel from the PMPP book. There are so many more concepts to learn to understand modern GEMMs, and all of the information is distributed across blog posts, conference talks, etc.

If one is remotely interested in CUDA or Triton programming, they have likely come across this gemm of a post (pun intended) by Simon Boehm, but it was primarily focused on fp32 kernels. The majority of workloads today have shifted to lower precision matmuls such as bf16, fp8, mxfp8, etc. In this post, I’ll walk through several of these optimization stages and go into some detail on tensor cores, WMMA, swizzling, pipelining, autotuning, etc. Pranjal Shankhodhar’s Outperforming cuBLAS on H100 and Aleksa Gordić’s Inside NVIDIA GPUs are two recent posts that I encountered while writing this blog post, and they are really good as well!

My goal was originally to understand a basic CUTLASS kernel. The problem with looking at something like CUTLASS without knowing all the basics is that you will understand the code but not what it is doing under the hood. So, I decided to follow a process to work up to a basic CUTLASS kernel and try to beat PyTorch matmul on my RTX 4090. The whole process and the blog post took me about a month or so and was definitely a rewarding experience!

Prologue

Code

Full implementation of all kernels

In the following sections, we will go over several steps in optimizing GEMM kernel, each time discussing a new concept. Initial sections will look closely into several things Simon covered in his blog post as well. In the later sections, we will look at fp16/bf16 matmul kernels and tensor cores. In the end, we’ll look into CUTLASS kernels and try to optimize/tune it to get the best performance that we can get.

I used Claude Code to create some of the javascript visualizations in this post. I hope readers find them useful. It is not an endorsment of Claude Code but yeah it was pretty good at a lot of javascript tasks. Claude Code was also great at writing some of the python scripts to do plotly graphs for performance benchmarking!

GEMM Basics

GEMM (General Matrix Multiply) is a fundamental operation defined as:

\[C = \alpha AB + \beta C\]where:

- $A$ is an $M \times K$ matrix

- $B$ is a $K \times N$ matrix

- $C$ is an $M \times N$ matrix (both input and output)

- $\alpha$ and $\beta$ are scalar coefficients

- The standard matrix product $C = AB$ is a special case with $\alpha = 1$ and $\beta = 0$

- When $\beta \neq 0$, GEMM accumulates into a pre-existing matrix $C$

- This formulation also enables fused operations, avoiding separate kernel launches. We’ll see this in CUTLASS section.

Computational Complexity

Each element $C[i,j]$ requires a dot product:

\[C[i,j] = \alpha \sum_{k=0}^{K-1} A[i,k] \times B[k,j] + \beta C[i,j]\]For matrices of size $M \times K$, $K \times N$:

- Total dot products: $M \times N$

- Operations per dot product: $2K$ (K multiplies + K adds) + 3 scalar ops for linear transformation with $\alpha$, $\beta$

- Total FLOPs: $2MNK + MK$ (dominated by dot products)

For a $4096 \times 4096$ matrix multiplication ($M = N = K = 4096$):

- Total operations: $2 \times 4096^3 \approx 137$ GFLOPs

- Memory required: $3 \times 4096^2 \times 4$ bytes $\approx$ 201 MB (float32)

Hardware Specifications

All benchmarks in this post were run on NVIDIA GeForce RTX 4090. Below are the key specifications:

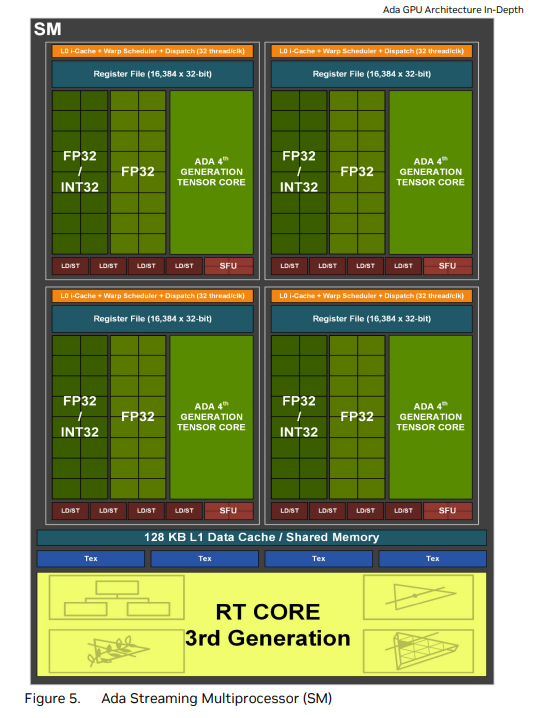

SM Architecture

| Specification | RTX 4090 (Ada Lovelace) |

|---|---|

| Architecture | Ada Lovelace |

| CUDA Cores | 16,384 |

| Streaming Multiprocessors (SMs) | 128 |

| FP32 Performance | 82.6 TFLOPS |

| Tensor Cores | 512 (4th Gen) |

| Tensor Performance (FP8) | 660.6 TFLOPS |

| RT Cores | 128 (3rd Gen) |

| Memory Size | 24 GB GDDR6X |

| Memory Bandwidth | 1,008 GB/s |

| L1 Cache / Shared Memory (Total) | 16,384 KB (16 MB) |

| L2 Cache | 72 MB |

| Shared Memory per SM | 128 KB |

| Registers per SM | 256 KB |

Source: NVIDIA Ada GPU Architecture Whitepaper

You can load these details directly using cudaDeviceProp as well:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

std::cout << "Number of CUDA devices: " << deviceCount << "\n\n";

for (int i = 0; i < deviceCount; ++i) {

cudaDeviceProp prop;

cudaGetDeviceProperties(&prop, i);

std::cout << "Device " << i << ": " << prop.name << "\n";

std::cout << " Compute capability: " << prop.major << "." << prop.minor << "\n";

std::cout << " Total global memory: " << (prop.totalGlobalMem >> 20) << " MB\n";

std::cout << " Shared memory per block: " << prop.sharedMemPerBlock << " bytes\n";

std::cout << " Shared memory per SM: " << prop.sharedMemPerMultiprocessor << " bytes\n";

std::cout << " Registers per block: " << prop.regsPerBlock << "\n";

std::cout << " Warp size: " << prop.warpSize << "\n";

std::cout << " Max threads per block: " << prop.maxThreadsPerBlock << "\n";

std::cout << " Max threads per SM: " << prop.maxThreadsPerMultiProcessor << "\n";

std::cout << " Number of SMs: " << prop.multiProcessorCount << "\n";

std::cout << " Max blocks per SM: " << prop.maxBlocksPerMultiProcessor << "\n";

std::cout << " Max grid dimensions: ["

<< prop.maxGridSize[0] << ", "

<< prop.maxGridSize[1] << ", "

<< prop.maxGridSize[2] << "]\n";

std::cout << " Max threads dim (block): ["

<< prop.maxThreadsDim[0] << ", "

<< prop.maxThreadsDim[1] << ", "

<< prop.maxThreadsDim[2] << "]\n";

std::cout << " Clock rate: " << prop.clockRate / 1000 << " MHz\n";

std::cout << " Memory Clock Rate: " << prop.memoryClockRate / 1000 << " MHz\n";

std::cout << " Memory Bus Width: " << prop.memoryBusWidth << " bits\n";

std::cout << " L2 Cache Size: " << prop.l2CacheSize << " bytes\n";

std::cout << " Registers per SM: " << prop.regsPerMultiprocessor << " registers\n";

std::cout << " Registers per Block: " << prop.regsPerBlock;

std::cout << " Registers per Block: " << prop.warpSize;

std::cout << std::endl;

}

Roofline Model

Roofline model helps us visualize the performance limitations of our GEMM kernels. I use the numbers for RTX 4090 below:

- Compute Bound (flat ceiling): Maximum FLOPS achievable (82.6 TFLOPS for FP32)

- Memory Bound (diagonal line): Performance limited by memory bandwidth (1,008 GB/s)

The transition point between memory-bound and compute-bound occurs at an arithmetic intensity of approximately 82 FLOP/byte. Modern optimized GEMM operations typically have high arithmetic intensity, making them compute-bound workloads.

Let’s look at some kernels now…

Naive Implementation

Concept

The simplest approach to calculate GEMM assigns each thread to compute one output element.

What does the memory access pattern look like in this naive case?

At a high level, each thread independently:

- Loads one row of $A$ (K elements)

- Loads one column of $B$ (K elements)

- Computes dot product

- Writes one element to $C$

Kernel

Here is a naive implementation for matrix multiply GEMM kernel:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

template <const uint block_size>

__global__ void sgemm_naive_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a,

const float *matrix_b, float beta, float *output_matrix)

{

// Map 1D thread ID to 2D output position

const int output_row = blockIdx.x * block_size + (threadIdx.x % block_size);

const int output_col = blockIdx.y * block_size + (threadIdx.x / block_size);

// Boundary check for non-multiple of block size

if (output_row < num_rows_a && output_col < num_cols_b)

{

float accumulator = 0.0f;

for (int k_idx = 0; k_idx < num_cols_a; ++k_idx)

{

accumulator += matrix_a[output_row * num_cols_a + k_idx] *

matrix_b[k_idx * num_cols_b + output_col];

}

// C = α*(A@B)+β*C

const int output_idx = output_row * num_cols_b + output_col;

output_matrix[output_idx] = alpha * accumulator + beta * output_matrix[output_idx];

}

}

Caller

We operate on torch Tensors directly to call the above kernel:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

namespace {

constexpr int ceil_div(int m, int n) {

return (m + n - 1) / n;

}

}

void sgemm_naive(const torch::Tensor &matrix_a, const torch::Tensor &matrix_b,

torch::Tensor &output_matrix, float alpha, float beta)

{

// Validate inputs

TORCH_CHECK(matrix_a.device().is_cuda(), "Matrix A must be on CUDA device");

TORCH_CHECK(matrix_b.device().is_cuda(), "Matrix B must be on CUDA device");

TORCH_CHECK(matrix_a.dtype() == torch::kFloat32, "Matrix A must be float32");

TORCH_CHECK(matrix_b.dtype() == torch::kFloat32, "Matrix B must be float32");

TORCH_CHECK(matrix_a.dim() == 2, "Matrix A must be 2D");

TORCH_CHECK(matrix_b.dim() == 2, "Matrix B must be 2D");

const int num_rows_a = static_cast<int>(matrix_a.size(0));

const int num_cols_a = static_cast<int>(matrix_a.size(1));

const int num_cols_b = static_cast<int>(matrix_b.size(1));

TORCH_CHECK(matrix_b.size(0) == num_cols_a, "Matrix dimensions must match: A is MxK, B must be KxN");

TORCH_CHECK(output_matrix.device().is_cuda(), "Matrix C must be on CUDA device");

TORCH_CHECK(output_matrix.dtype() == torch::kFloat32, "Matrix C must be float32");

TORCH_CHECK(output_matrix.size(0) == num_rows_a && output_matrix.size(1) == num_cols_b, "Matrix C must be MxN");

// Get raw device pointers

const float *d_matrix_a = matrix_a.data_ptr<float>();

const float *d_matrix_b = matrix_b.data_ptr<float>();

float *d_output_matrix = output_matrix.data_ptr<float>();

// Configure kernel launch: 1D blocks with block_size^2 threads (32x32 = 1024 threads per block)

constexpr uint block_size = 32;

dim3 block_dim(block_size * block_size);

dim3 grid_dim(ceil_div(num_rows_a, block_size),

ceil_div(num_cols_b, block_size));

// Launch kernel

sgemm_naive_kernel<block_size><<<grid_dim, block_dim>>>(

num_rows_a, num_cols_b, num_cols_a,

alpha, d_matrix_a, d_matrix_b, beta, d_output_matrix);

}

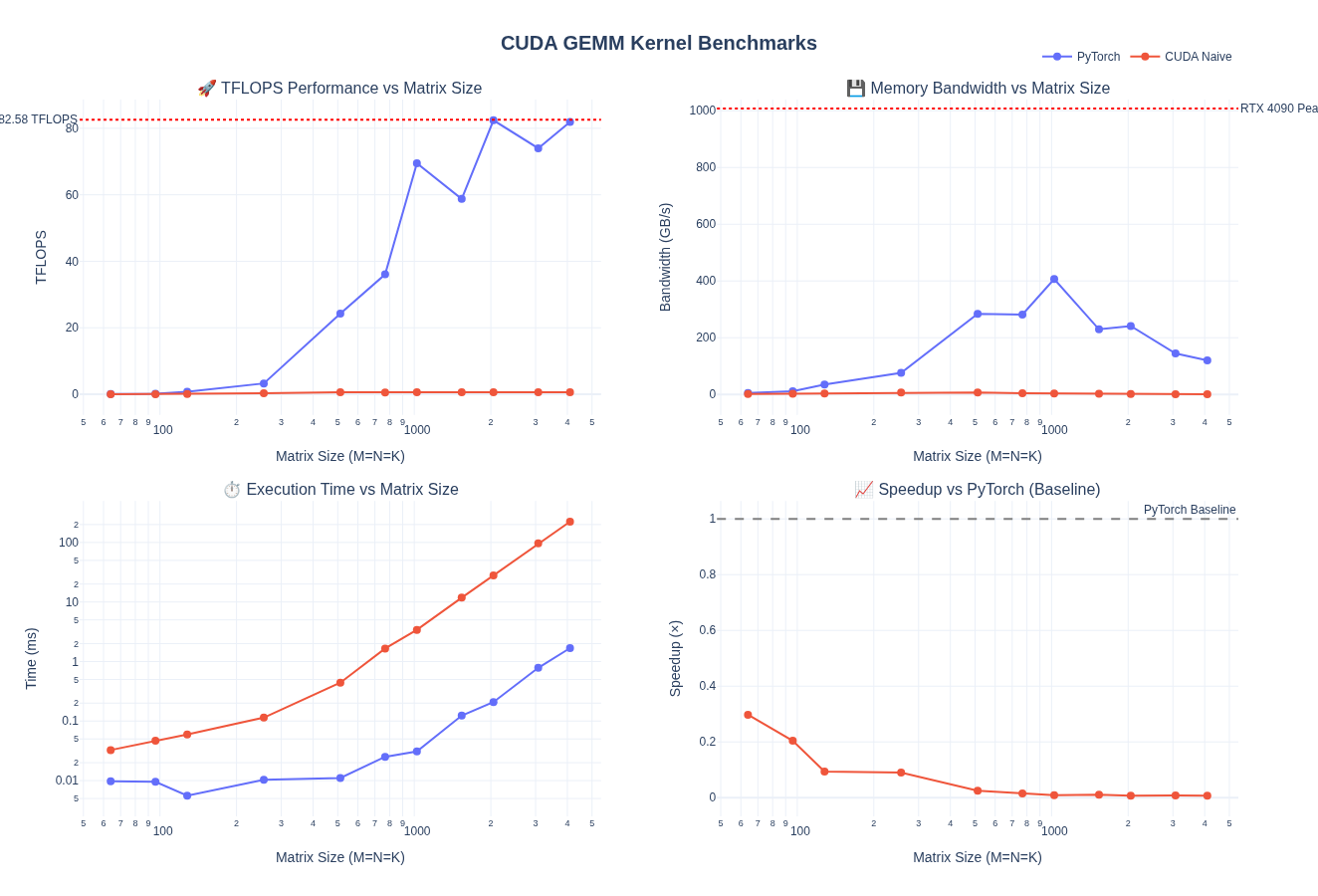

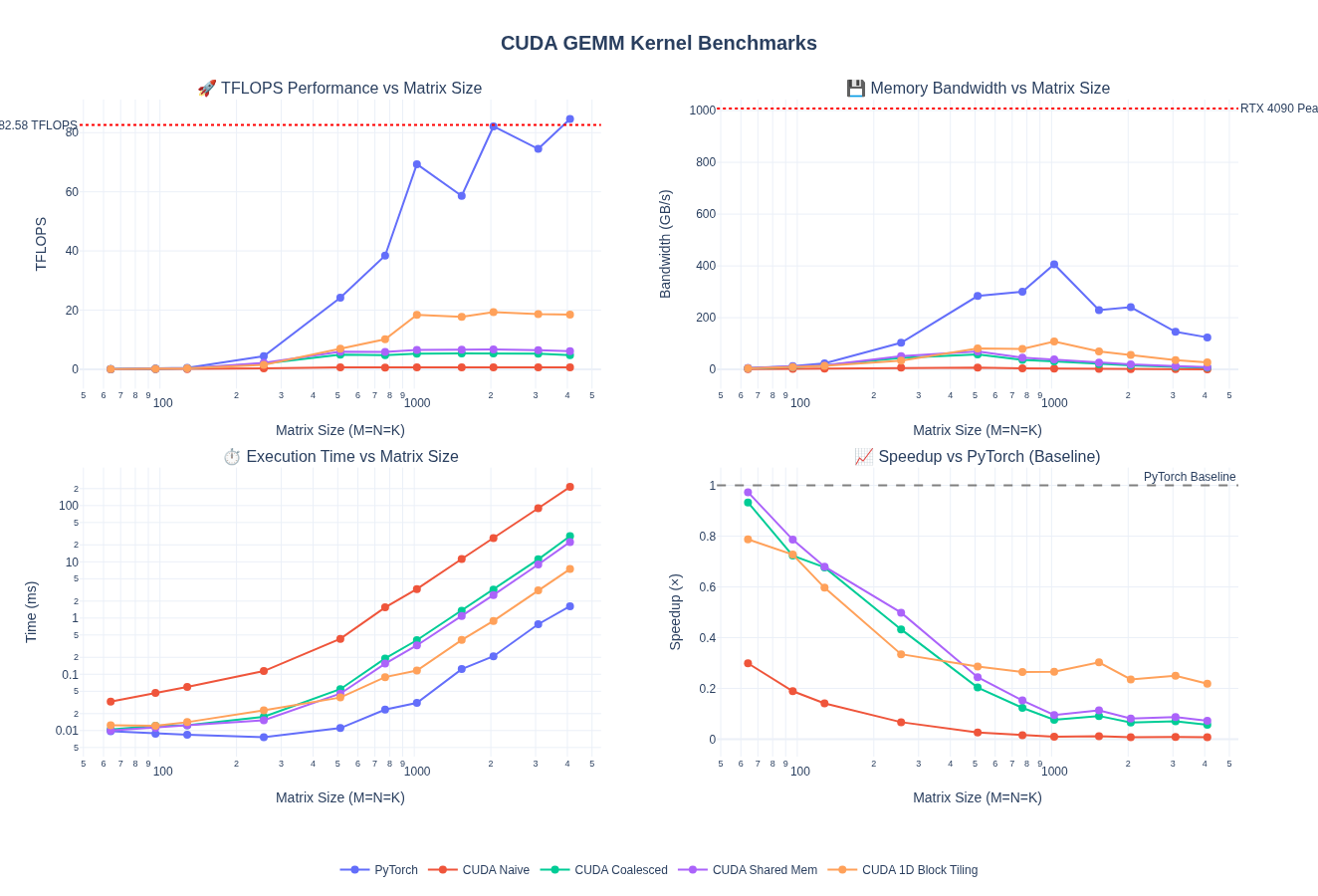

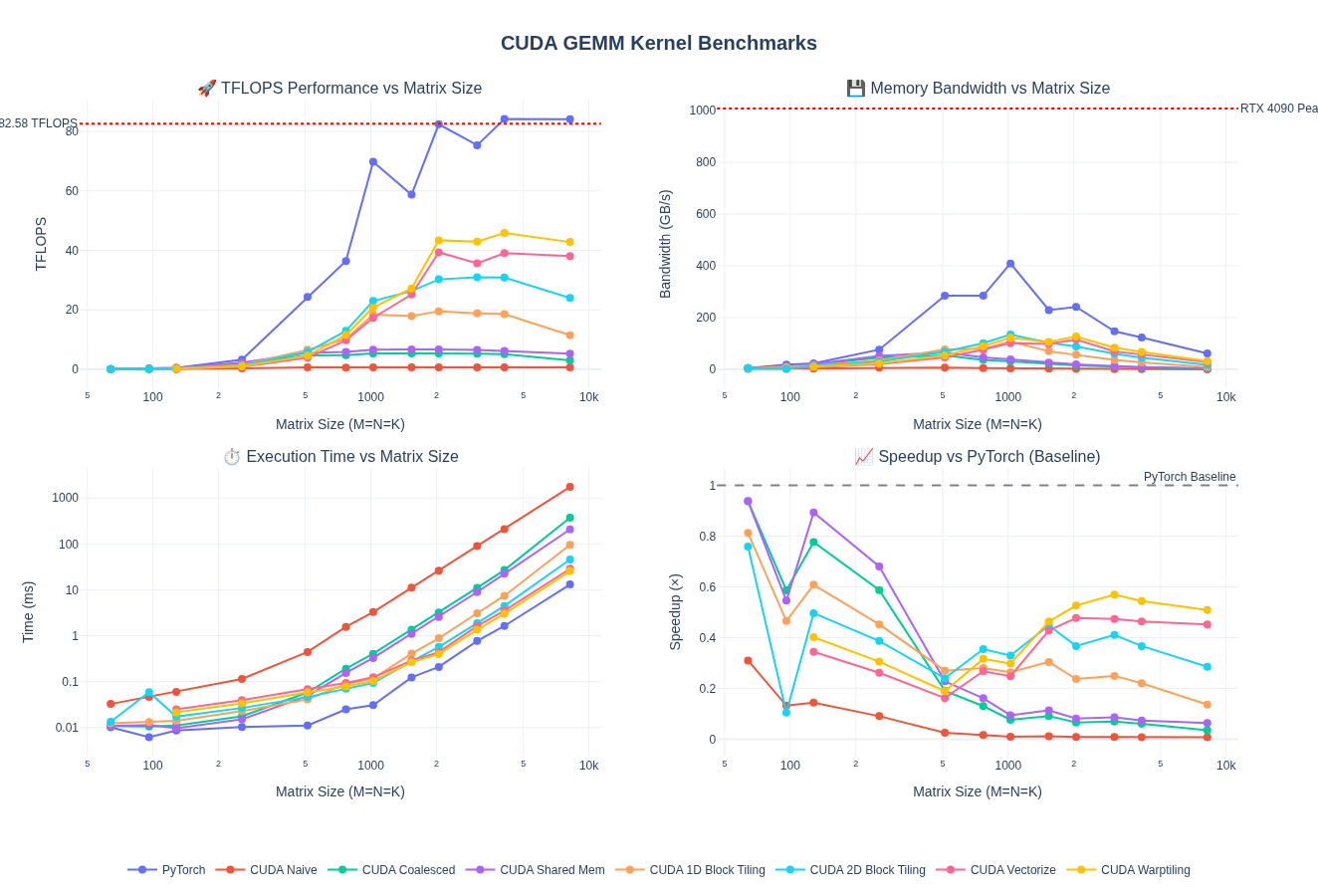

Performance Analysis

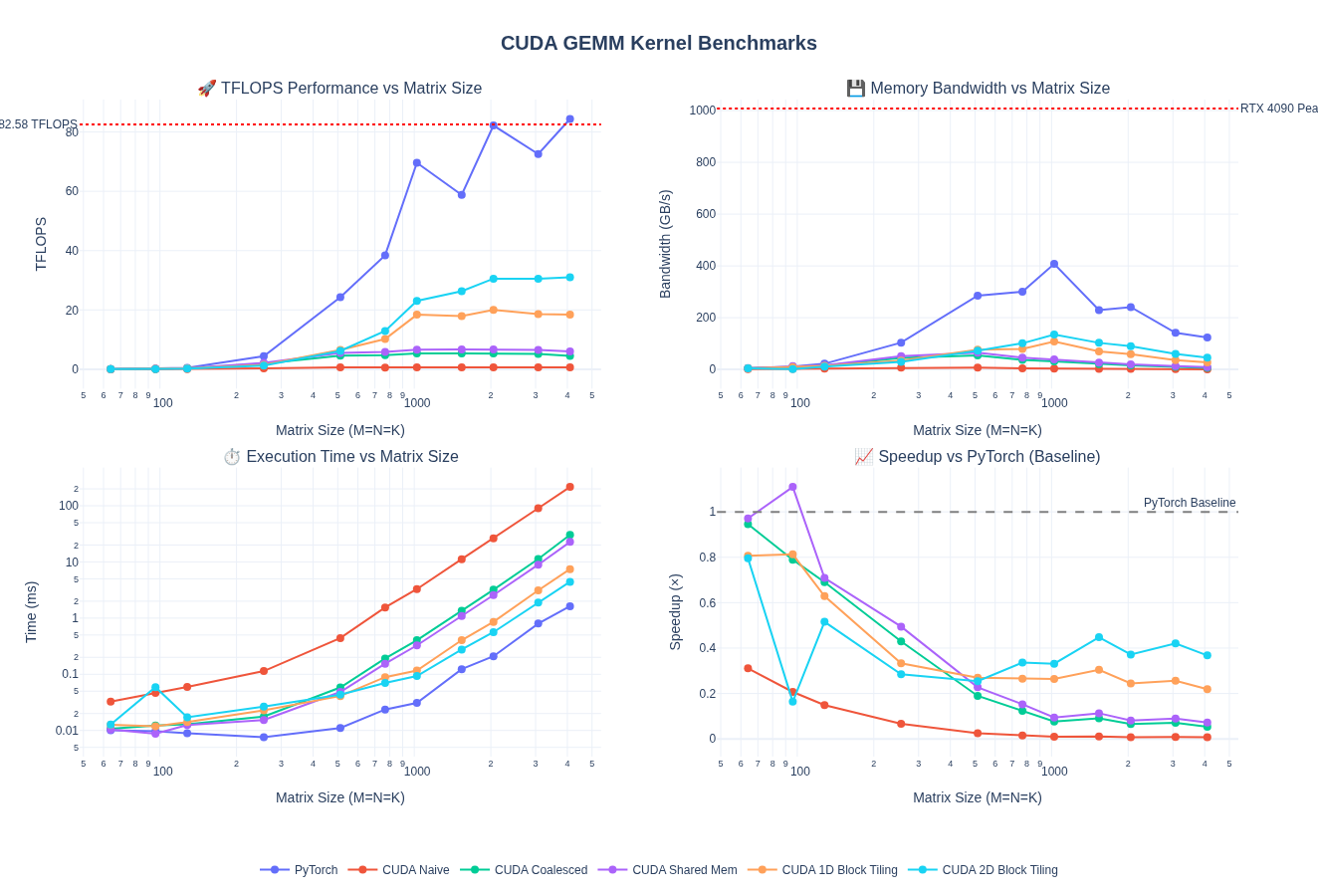

Below are the full benchmark results comparing the naive CUDA kernel against PyTorch’s optimized GEMM implementation across different shapes. As we can see, the naive implementation is significantly slower than PyTorch’s optimized kernel, achieving only ~1% of PyTorch’s performance.

For M = N = K = 4096:

- The naive CUDA kernel is 133× slower than PyTorch (0.01× speedup)

- Achieves only 0.76% of PyTorch’s TFLOPS

- Bandwidth utilization is 133× worse (0.90 GB/s vs 119.97 GB/s)

Global Memory Coalescing

Let’s take a brief digression before we look into why naive kernel is so slow.

Concept

Apart from PMPP book, slides from an NVidia GTC talk is a really good reference to understand the memory hierarchy details and why memory access patterns are the primary consideration when we think about GPU/CUDA performance. For recent architectures, CUTLASS/CUTE documentation is really good reference as well.

Some Basics

To understand memory coalescing, we need to first understand GPU execution hierarchy:

- Threads: Individual execution units in your CUDA kernel

- Warps: Groups of 32 threads that execute the same instruction simultaneously (SIMT - Single Instruction, Multiple Thread)

- Thread Blocks: Logical groupings of threads (up to 1024 threads) that share resources and can synchronize

- Streaming Multiprocessors (SMs): The physical processors on the GPU that execute thread blocks

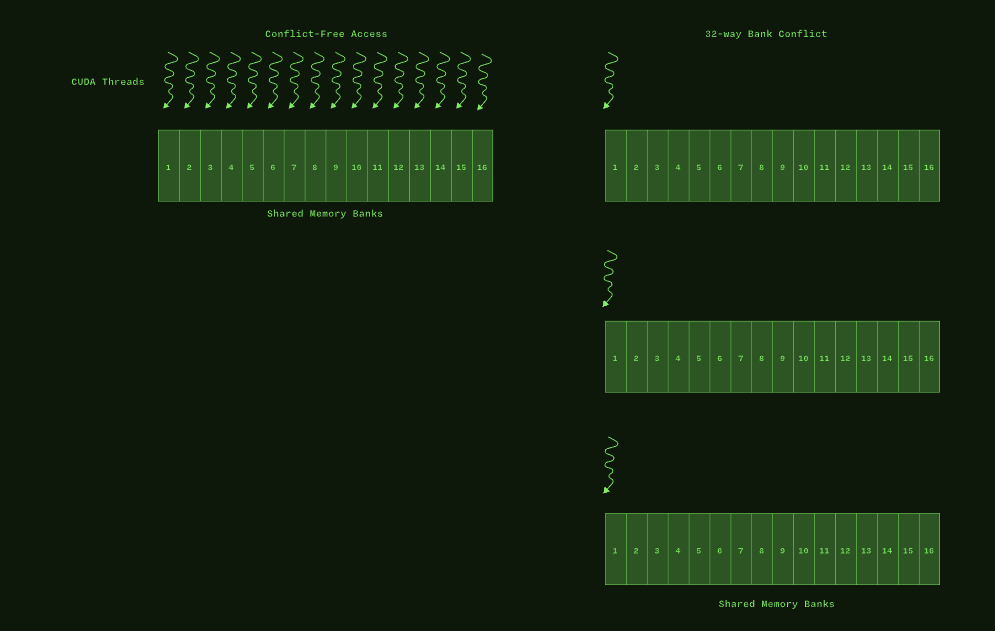

Warps are the fundamental unit of execution such that all 32 threads in a warp execute the same instruction at the same time. Also, when these threads access consecutive memory addresses, the hardware can combine their memory requests into a single transaction. Modern GPU DRAM systems can fetch large contiguous blocks (32B, 64B, or 128B cache lines) in one transaction. Without coalescing, the same 32 accesses could require 32 separate transactions.

In addition, other things to note about SMs include:

- Each SM has limited resources (registers, shared memory)

- Multiple thread blocks compete for these resources

- SMs can switch between warps in a single clock cycle, enabling latency hiding. While one warp waits for memory, another executes

- GEMM efficiency depends on keeping all warp schedulers busy with coalesced memory access patterns

Why is Naive Kernel Slow?

Let’s add some debug info to see what threads map to what values on A, B

1

2

3

4

5

6

7

+ if (threadIdx.x < 64)

+ {

+ printf("Thread %d ; Warp %d: Multiplying A[%d][%d] * B[%d][%d] = C[%d][%d]\n",

+ threadIdx.x, threadIdx.x / 32, output_row, k_idx,

+ k_idx, output_col,

+ output_row, output_col);

+ }

1

2

3

4

5

6

7

8

9

10

Thread 0 ; Warp 0: Multiplying A[0][0] * B[0][0] = C[0][0]

Thread 1 ; Warp 0: Multiplying A[1][0] * B[0][0] = C[1][0]

...

Thread 30 ; Warp 0: Multiplying A[30][0] * B[0][0] = C[30][0]

Thread 31 ; Warp 0: Multiplying A[31][0] * B[0][0] = C[31][0]

Thread 32 ; Warp 1: Multiplying A[0][0] * B[0][1] = C[0][1]

Thread 33 ; Warp 1: Multiplying A[1][0] * B[0][1] = C[1][1]

...

Thread 62 ; Warp 1: Multiplying A[30][0] * B[0][1] = C[30][1]

Thread 63 ; Warp 1: Multiplying A[31][0] * B[0][1] = C[31][1]

We can see that the naive kernel’s memory access pattern is inefficient. For each thread in a warp, it is accessing A[k][0] values where k is the thread id in a warp. When threads in a warp access scattered memory locations, each access requires a separate memory transaction. It is solvable by memory coalescing such that we restructure the thread-to-output mapping so that threads in the same warp access consecutive memory locations, enabling the hardware to combine multiple accesses into a single transaction.

Problem: Threads access memory in a scattered, non-coalesced pattern.

Turns out the only change we need is to swap % and / across row and col calculations for C.

1

2

3

4

5

// Map 1D thread ID to 2D output position for coalesced memory access

// *** KEY CHANGE wrt NAIVE kernel

const int output_row = blockIdx.x * block_size + (threadIdx.x / block_size);

const int output_col = blockIdx.y * block_size + (threadIdx.x % block_size);

// *** KEY CHANGE wrt NAIVE kernel

Memory Access Visualization

Let’s visualize how the coalesced kernel accesses memory during matrix multiplication. Notice how threads in the same warp now access the same row of matrix A, enabling memory coalescing. We will take a simple example with block_size=4:

Kernel

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

template <const uint block_size>

__global__ void sgemm_global_mem_coalesce_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a,

const float *matrix_b, float beta, float *matrix_c)

{

// Map 1D thread ID to 2D output position for coalesced memory access

// *** KEY CHANGE wrt NAIVE kernel

const int output_row = blockIdx.x * block_size + (threadIdx.x / block_size);

const int output_col = blockIdx.y * block_size + (threadIdx.x % block_size);

// *** KEY CHANGE wrt NAIVE kernel

// Boundary check for non-multiple of block size

if (output_row < num_rows_a && output_col < num_cols_b)

{

float accumulator = 0.0f;

for (int k_idx = 0; k_idx < num_cols_a; ++k_idx)

{

accumulator += matrix_a[output_row * num_cols_a + k_idx] *

matrix_b[k_idx * num_cols_b + output_col];

}

const int output_idx = output_row * num_cols_b + output_col;

matrix_c[output_idx] = alpha * accumulator + beta * matrix_c[output_idx];

}

}

The key change from the naive kernel:

Threads with consecutive

threadIdx.xnow access consecutive elements in the same row of A, enabling coalescing. I have removed the calling wrapper since it is the same as the last kernel and for brevity.

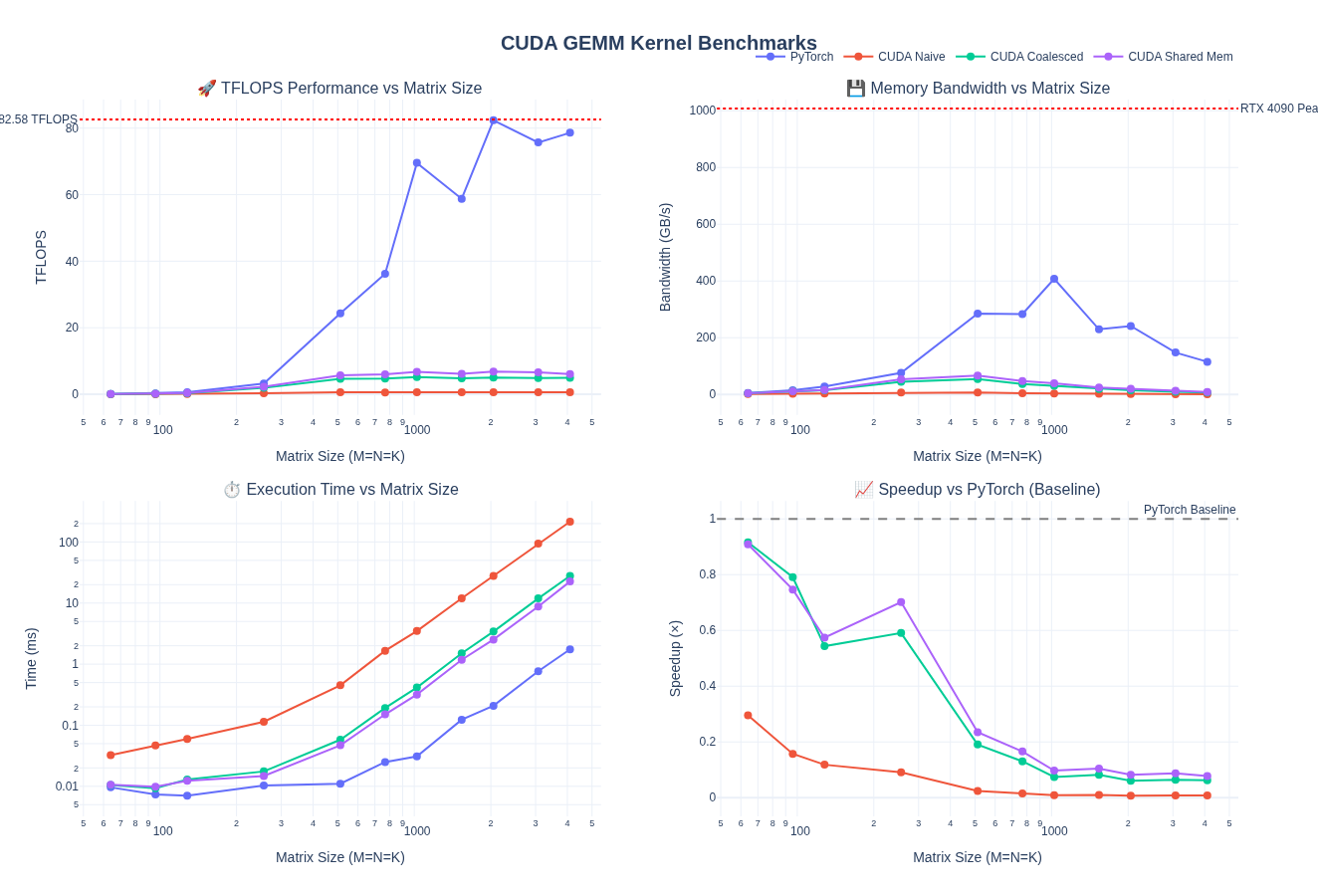

Performance Analysis

Below are the full benchmark results comparing the naive + coallesced memory access CUDA kernel against PyTorch’s optimized GEMM implementation across different shapes:

Just like the naive version, we ran a benchmark for N = M = K = 4096 to get the FLOPs and memory bandwidth numbers.

Throughput:

- 7.63× TFLOPS improvement (0.62 → 4.73 TFLOPS)

- Still only 5.8% of PyTorch’s performance, but a significant step up

Bandwidth:

- 7.71× bandwidth improvement (0.90 → 6.94 GB/s)

- Better memory utilization through coalesced access patterns

We can see that performance improved but still way slower than the pytorch version.

Shared Memory Caching

Concept

Before diving into shared memory optimization, let’s understand the RTX 4090’s memory hierarchy and why shared memory is so critical for performance. Shared memory provides significant bandwidth advantage compared to global memory, since it is on-chip. As a result, latency of loads/stores are at least one order of magnitude better compared to global memory. I could not find any public numbers on the differences though.

Even with coalesced access, both the naive and coalesced kernels repeatedly read the same data from global memory:

- Each element of matrix $A$ is read $N$ times (once per column of $B$)

- Each element of matrix $B$ is read $M$ times (once per row of $A$)

Using the Shared Memory

RTX 4090 has 128 KB of shared memory per SM (16MB / 128 SMs) that serves as a fast on-chip cache/shared memory. This shared memory is partitioned among thread blocks (each block gets its own chunk), accessible by all threads within a block. With 128 SMs on the RTX 4090, there’s a total of 16.4 MB of shared memory distributed across the chip. Instead of repeatedly reading the same data from slow global memory, as an optimization strategy, we can load tiles (chunks) of matrices A and B into this fast shared memory, compute partial results using the cached tile data with high reuse across multiple threads, then slide these tiles across the matrices to compute the final result, effectively transforming a bandwidth-bound problem into a compute-bound one. Let’s look at how this works below:

NOTE: We need to synchronize threads after both loading the data into shared memory and also after finishing the tiled matmuls to ensure:

All data is loaded before we do the matmul calculations

All calculated data is stored back into matrix C before going to next tiles

Kernel

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

template <const uint block_size>

__global__ void sgemm_shared_mem_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a,

const float *matrix_b, float beta,

float *matrix_c)

{

const uint block_row = blockIdx.x;

const uint block_col = blockIdx.y;

__shared__ float tile_a[block_size * block_size];

__shared__ float tile_b[block_size * block_size];

const uint thread_row = threadIdx.x / block_size;

const uint thread_col = threadIdx.x % block_size;

// Calculate global row and column indices for this thread

const uint global_row = block_row * block_size + thread_row;

const uint global_col = block_col * block_size + thread_col;

// Move pointers to the starting position for this block

matrix_a += block_row * block_size * num_cols_a; // row=block_row, col=0

matrix_b += block_col * block_size; // row=0, col=block_col

matrix_c += block_row * block_size * num_cols_b + block_col * block_size;

float accumulator = 0.0f;

// Loop over all tiles along K dimension

for (int tile_idx = 0; tile_idx < num_cols_a; tile_idx += block_size)

{

// Load tile from matrix A into shared memory with bounds checking

// thread_col is consecutive for coalesced memory access

if (global_row < num_rows_a && (tile_idx + thread_col) < num_cols_a)

{

tile_a[thread_row * block_size + thread_col] =

matrix_a[thread_row * num_cols_a + thread_col];

}

else

{

tile_a[thread_row * block_size + thread_col] = 0.0f;

}

// Load tile from matrix B into shared memory with bounds checking

// thread_col is consecutive for coalesced memory access

if ((tile_idx + thread_row) < num_cols_a && global_col < num_cols_b)

{

tile_b[thread_row * block_size + thread_col] =

matrix_b[thread_row * num_cols_b + thread_col];

}

else

{

tile_b[thread_row * block_size + thread_col] = 0.0f;

}

// Block threads until cache is fully populated

__syncthreads();

// Advance pointers to next tile

matrix_a += block_size;

matrix_b += block_size * num_cols_b;

// Compute partial dot product using shared memory

for (int dot_idx = 0; dot_idx < block_size; ++dot_idx)

{

accumulator += tile_a[thread_row * block_size + dot_idx] *

tile_b[dot_idx * block_size + thread_col];

}

// Sync again to avoid faster threads fetching next block before slower threads finish

__syncthreads();

}

// Write result to global memory with bounds checking: C = α*(A@B)+β*C

if (global_row < num_rows_a && global_col < num_cols_b)

{

matrix_c[thread_row * num_cols_b + thread_col] =

alpha * accumulator + beta * matrix_c[thread_row * num_cols_b + thread_col];

}

}

Again, we have removed the caller code since it looks similar to previous kernels

Performance Analysis

Below are all the results from the benchmarking:

Running the shared memory kernel for M = N = K = 4096:

Performance Improvement:

- 1.24× TFLOPS improvement over coalesced (4.94 → 6.10 TFLOPS)

- 1.24× bandwidth improvement (7.23 → 8.94 GB/s)

- 9.5× faster than naive (0.64 → 6.10 TFLOPS)

- Still only 7.8% of PyTorch’s performance

Key Insight:

- Shared memory caching provides modest improvement (~24%) over just coalescing

- The relatively small gain suggests we’re not yet effectively hiding memory latency

Some improvement but still far behind the baseline performance.

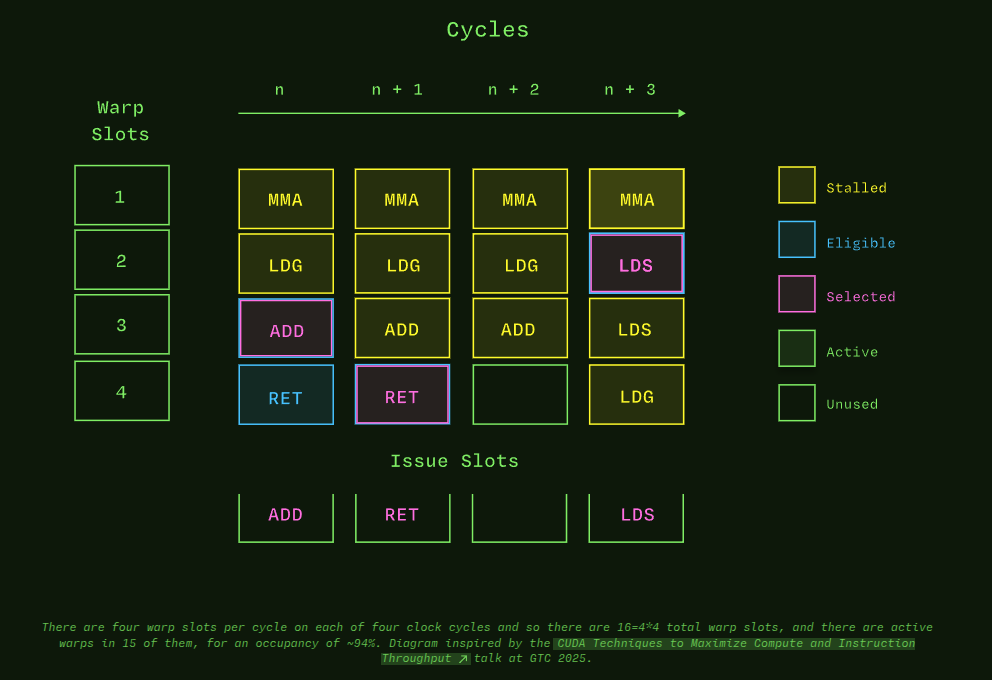

Understanding GPU Occupancy

Before diving into more advanced optimizations, we need to understand occupancy, which determines how well we utilize the GPU’s resources.

What is Occupancy?

Occupancy is the ratio of active warps to the maximum number of possible warps per SM:

\[\text{Occupancy} = \frac{\text{Active Warps per SM}}{\text{Maximum Warps per SM}}\]Here is a really nice diagram and description from Modal GPU Glossary

GPUs hide memory latency through massive parallelism. When one warp waits for memory, the SM immediately switches to execute another warp. Occupancy does not enable performance directly but primarily through latency hiding. Higher occupancy effectively means more warps available for compute and we can hide latency more effectively. If occupancy is low, hardware sits idle waiting for data. However, occupancy is not everything. A kernel with 100% occupancy but poor memory access patterns can still perform poorly since that could lead to lower register/shared memory per thread (covered later). So, it is important to take into account other factors apart from just optimizing for occupancy.

Key Considerations

Now, each thread gets registers from the SM’s register file. More registers per thread implies fewer concurrent threads.

\[\text{Max Threads} = \min\left(\frac{65536 \text{ registers/SM}}{\text{registers per thread}}, 1536\right)\]| Registers/Thread | Max Threads | Active Warps | Occupancy |

|---|---|---|---|

| 32 | 1,536 (limited by hardware max) | 48 | 100% |

| 64 | 1,024 | 32 | 66.7% |

| 128 | 512 | 16 | 33.3% |

In addition, shared memory is partitioned among thread blocks on the same SM.

\[\text{Max Blocks} = \min\left(\frac{102400 \text{ bytes/SM}}{\text{shared memory per block}}, \underbrace{32}_{\text{hardware limit}}\right)\]Note: 32 is maximum resident blocks per SM (hardware limit for RTX 4090)

| Shared Memory/Block | Max Blocks | Notes |

|---|---|---|

| 0 KB | 32 | No shared memory usage |

| 24 KB | 4 | Good for moderate tiling |

| 48 KB (max for RTX 4090) | 2 | Large tiles, fewer blocks |

Lastly, number of threads per block affects how many blocks can fit on an SM and hence affecting occupancy.

| Threads/Block | Warps/Block | Max Blocks/SM | Active Warps | Occupancy |

|---|---|---|---|---|

| 128 | 4 | 12 | 48 | 100% |

| 256 | 8 | 6 | 48 | 100% |

| 512 | 16 | 3 | 48 | 100% |

| 1024 | 32 | 1 | 32 | 66.7% |

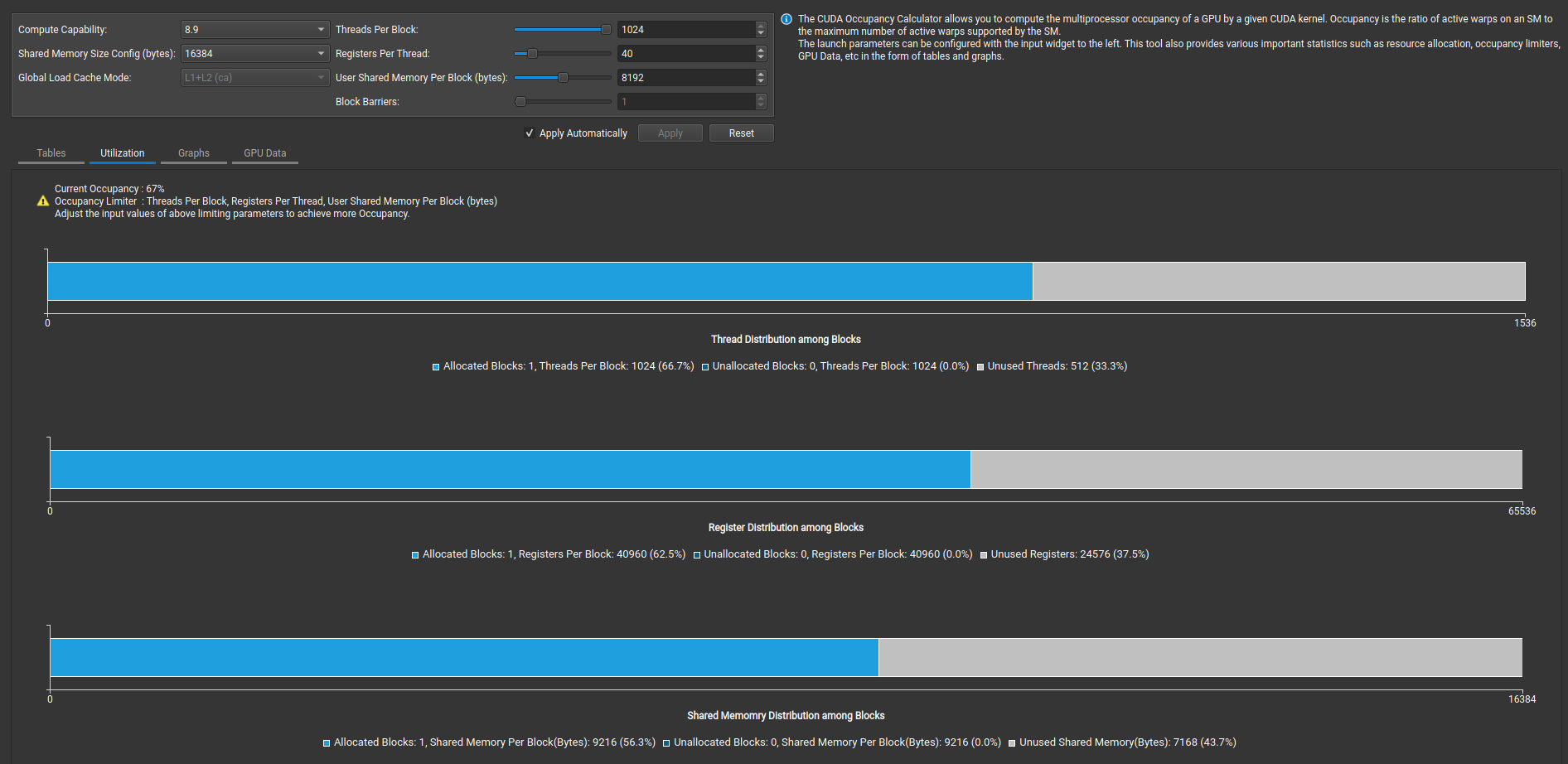

Occupancy for Our Shared Memory Kernel

Let’s analyze our current shared memory kernel:

1

2

constexpr uint BLOCKSIZE = 32;

dim3 block_dim(BLOCKSIZE * BLOCKSIZE); // 1024 threads per block

Shared memory usage:

1

2

3

__shared__ float tile_a[32 * 32]; // 4 KB

__shared__ float tile_b[32 * 32]; // 4 KB

// Total: 8 KB per block

Occupancy calculation:

- Threads per block: 1,024 threads (32 warps)

- Blocks per SM (thread limit): $\lfloor 1536 / 1024 \rfloor = 1$ block

- Blocks per SM (shared memory limit): $\lfloor 102400 / 8192 \rfloor = 12$ blocks

- Blocks per SM (block limit): 32 blocks (max)

- Actual blocks per SM: $\min(1, 12, 32) = 1$ block

Active warps: $1 \text{ block} \times 32 \text{ warps/block} = 32 \text{ warps}$

Occupancy: $\frac{32}{48} = 66.7\%$

Problem: Our large block size (1,024 threads) limits us to only 1 block per SM, resulting in just 66.7% occupancy. 66.7% occupancy is not necessarily bad but let’s see more details using nsight-compute on how to improve.

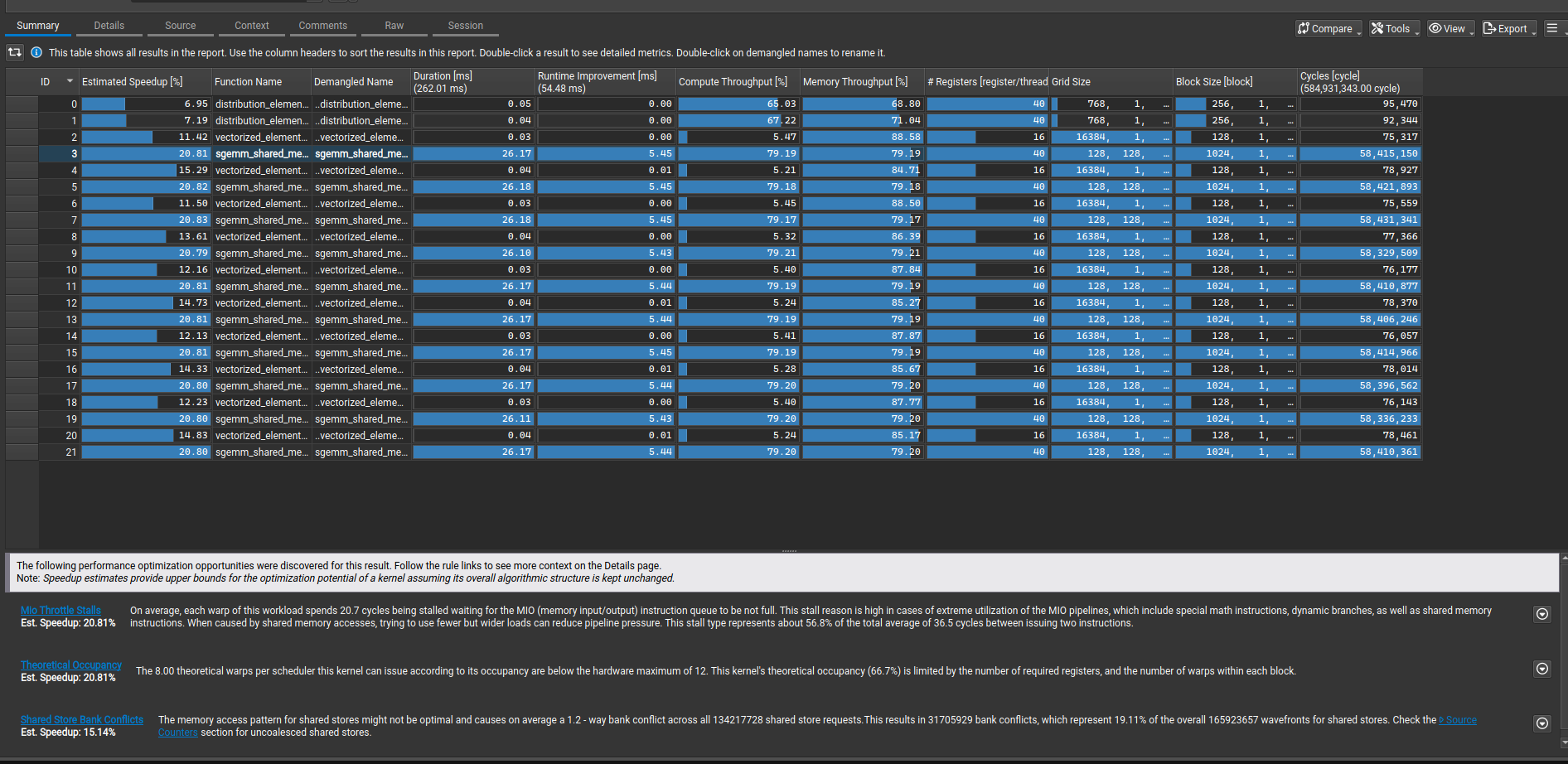

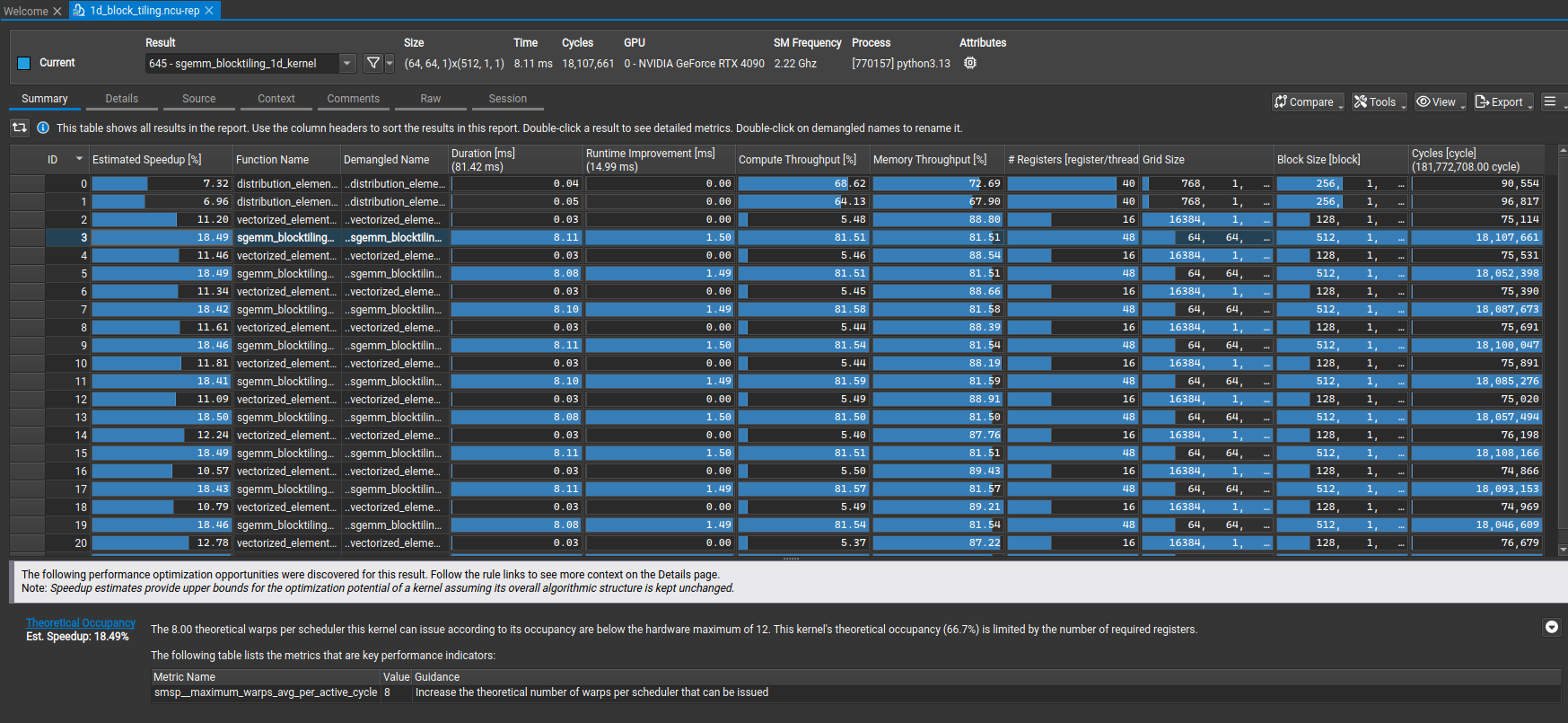

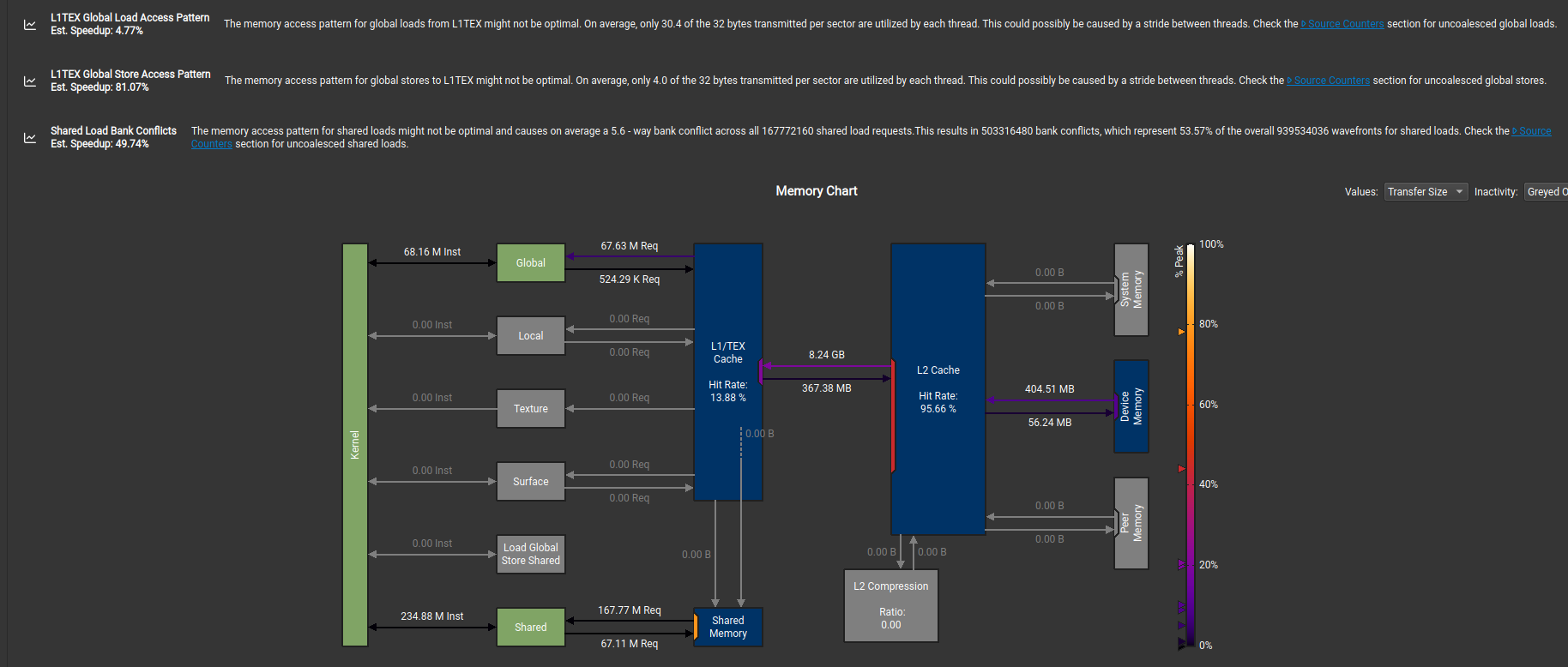

Nsight Compute Profiling

First let’s confirm our occupancy calculation and it matches.

Now, looking at the summary - it provides some ideas on why the kernel is still slow:

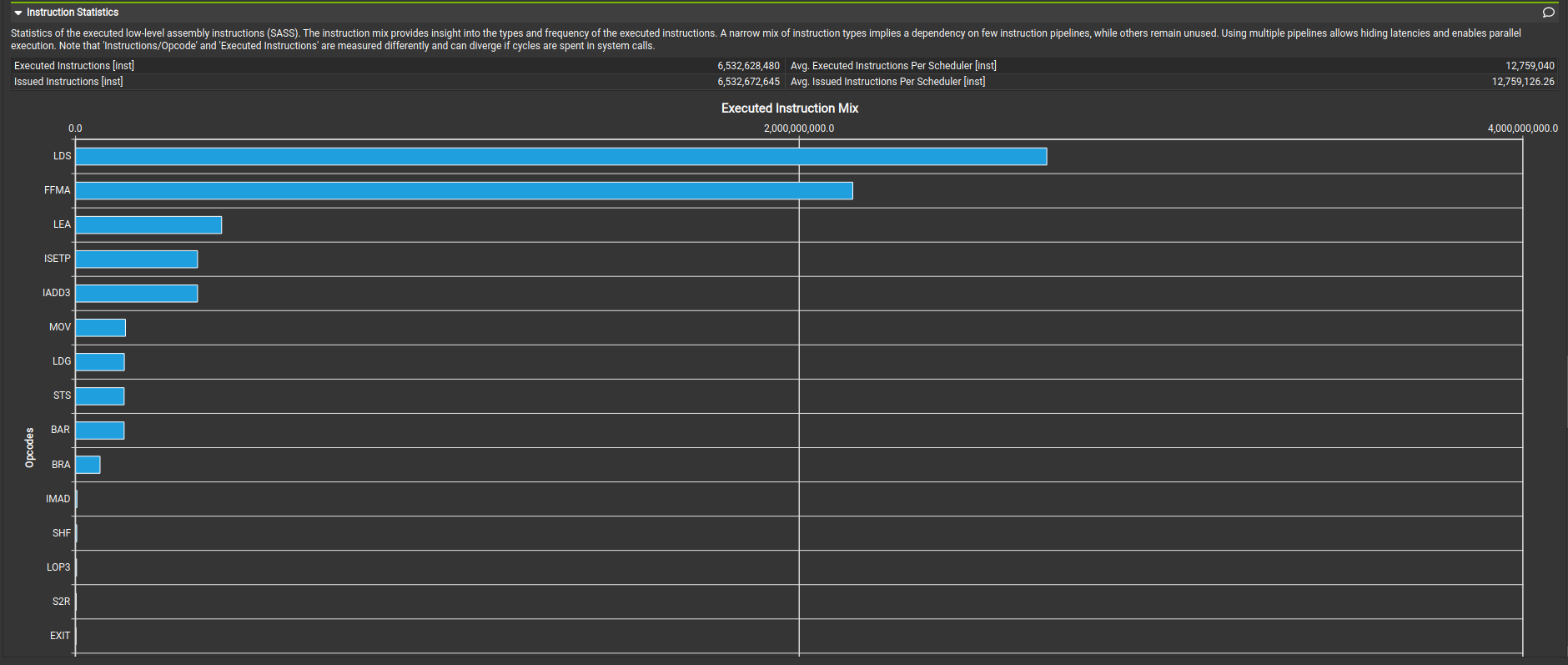

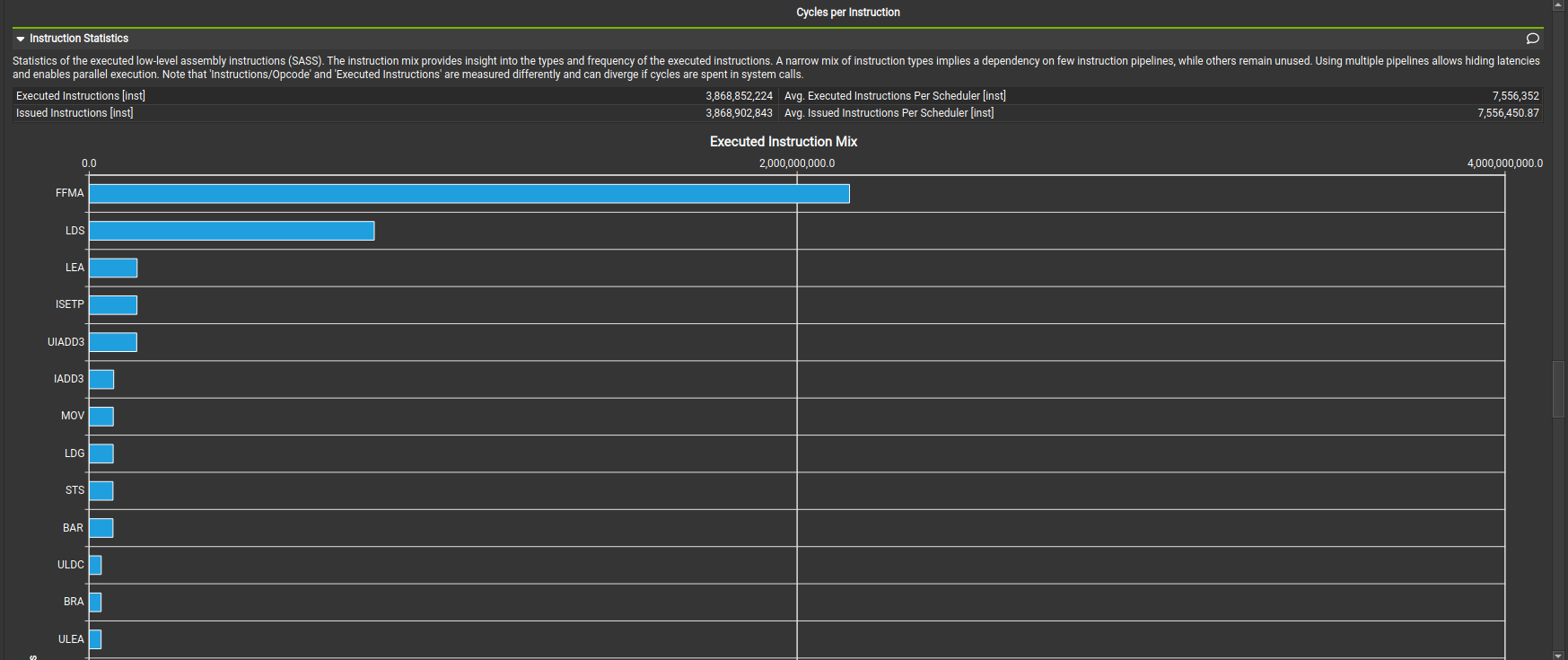

Next, looking at the instruction mix, we can see that LDS dominates the instruction mix, LDS = load within shared memory window, which is not good.

So, next we will focus on how to reduce the LDS instructions in our kernel.

1D Block Tiling

Concept

In the previous kernel, each thread was computing a single output element of matrix C, meaning each thread needed to load elements from shared memory repeatedly, with memory accesses dominating the execution time.

Next, instead of each thread computing exactly one output element of the tile, each thread computes multiple output elements along one dimension. To support this, we fetch/cache some data from SMEM into registers (for reuse) within each thread, reducing repeated SMEM loads.

In essence, we are trying to improve the arithmetic intensity of the kernel, which effectively means computing more results per thread with the same loaded data i.e. increase FLOPS/byte

To accomplish this, we introduce TM accumulators for TM outputs per thread i.e.

1

float thread_results[TM] = {0.0f};

and later we calculate TM outputs per thread

1

2

3

4

5

6

7

8

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx) {

float b_tmp = tile_b[dot_idx * BN + thread_col];

for (uint res_idx = 0; res_idx < TM; ++res_idx) {

thread_results[res_idx] +=

tile_a[(thread_row * TM + res_idx) * BK + dot_idx] * b_tmp;

}

}

Let’s look at a visualization:

Kernel

We now evolve our kernel to introduce paramters that we will need later. We expand on the existing block_size to make them configurable such as BM, BN, BK, etc. They represent the block sizes we are operating on for M, N, and K dimensions. In addition, we add TM as a parameter that determines, how many values calculated per thread! Full listing below:

NOTE: Simon Boehm’s Kernels did not handle bounds check i.e. non-block multiple kernels will be incorrect. I tried to add the bounds check here but we can see that it starts to make the code fairly complex. We will keep the bounds check as long as we can but will drop it later sections to focus on core concepts.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

template <const int BM, const int BN, const int BK, const int TM>

__global__ void sgemm_blocktiling_1d_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a,

const float *matrix_b, float beta,

float *matrix_c)

{

const uint block_row = blockIdx.x;

const uint block_col = blockIdx.y;

__shared__ float tile_a[BM * BK];

__shared__ float tile_b[BK * BN];

const uint thread_row = threadIdx.x / BN;

const uint thread_col = threadIdx.x % BN;

// Calculate global row and column indices for this thread

const int global_row = block_row * BM + thread_row * TM;

const int global_col = block_col * BN + thread_col;

// Move pointers to the starting position for this block

matrix_a += block_row * BM * num_cols_a; // row=block_row, col=0

matrix_b += block_col * BN; // row=0, col=block_col

matrix_c += block_row * BM * num_cols_b + block_col * BN;

// Allocate thread-local cache for results in registerfile

float thread_results[TM] = {0.0f};

// Loop over all tiles along K dimension

for (int tile_idx = 0; tile_idx < num_cols_a; tile_idx += BK)

{

// Load tile from matrix A into shared memory with bounds checking

// Each thread loads one element from A

const uint a_row = threadIdx.x / BK;

const uint a_col = threadIdx.x % BK;

if ((block_row * BM + a_row) < num_rows_a && (tile_idx + a_col) < num_cols_a) {

tile_a[a_row * BK + a_col] = matrix_a[a_row * num_cols_a + a_col];

} else {

tile_a[a_row * BK + a_col] = 0.0f;

}

// Load tile from matrix B into shared memory with bounds checking

// Each thread loads one element from B

const uint b_row = threadIdx.x / BN;

const uint b_col = threadIdx.x % BN;

if ((tile_idx + b_row) < num_cols_a && (block_col * BN + b_col) < num_cols_b) {

tile_b[b_row * BN + b_col] = matrix_b[b_row * num_cols_b + b_col];

} else {

tile_b[b_row * BN + b_col] = 0.0f;

}

__syncthreads();

// Advance pointers to next tile

matrix_a += BK;

matrix_b += BK * num_cols_b;

// Calculate per-thread results

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx) {

// We make the dotproduct loop the outside loop, which facilitates

// reuse of the tile_b entry, which we can cache in a tmp var.

float b_tmp = tile_b[dot_idx * BN + thread_col];

for (uint res_idx = 0; res_idx < TM; ++res_idx) {

thread_results[res_idx] +=

tile_a[(thread_row * TM + res_idx) * BK + dot_idx] * b_tmp;

}

}

__syncthreads();

}

// Write results to global memory: C = α*(A@B)+β*C

for (uint res_idx = 0; res_idx < TM; ++res_idx) {

int row = global_row + res_idx;

if (row < num_rows_a && global_col < num_cols_b) {

matrix_c[(thread_row * TM + res_idx) * num_cols_b + thread_col] =

alpha * thread_results[res_idx] +

beta * matrix_c[(thread_row * TM + res_idx) * num_cols_b + thread_col];

}

}

}

Caller

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

void sgemm_blocktiling_1d(const torch::Tensor &matrix_a, const torch::Tensor &matrix_b,

torch::Tensor &output_matrix, float alpha, float beta)

{

// ... rest of the code is similar

// ...

// Template parameters for kernel

constexpr int BM = 64;

constexpr int BN = 64;

constexpr int BK = 8;

constexpr int TM = 8;

// Configure kernel launch

// Tiling strategy :

// - BM x BK from A, BK x BN from B

// - TM values per thread

// Number of threads = (BM / TM) * BN = (64 / 8) * 64 = 512 threads per block

dim3 block_dim((BM / TM) * BN);

dim3 grid_dim(CEIL_DIV(num_rows_a, BM),

CEIL_DIV(num_cols_b, BN));

// Launch kernel

sgemm_blocktiling_1d_kernel<BM, BN, BK, TM><<<grid_dim, block_dim>>>(

num_rows_a, num_cols_b, num_cols_a,

alpha, d_matrix_a, d_matrix_b, beta, d_output_matrix);

}

Performance Analysis

Let’s look at overall performance across several batch sizes

Performance Improvement:

- 3.03× TFLOPS improvement over shared memory (6.10 → 18.49 TFLOPS)

- 3.03× bandwidth improvement (8.94 → 27.08 GB/s)

- 28.9× faster than naive (0.64 → 18.49 TFLOPS)

- Achieved 21.8% of PyTorch’s performance (84.62 TFLOPS)

Key Insight:

- Register-level tiling provides 3× improvement by increasing arithmetic intensity

- By caching

b_tmpin registers and reusing it TM times, we reduce shared memory traffic- Each thread now computes TM=8 outputs instead of 1, amortizing memory access costs

Comparison vs Previous Kernels:

| Kernel | Time (ms) | TFLOPS | vs PyTorch | Speedup over Naive |

|---|---|---|---|---|

| Naive | 214.24 | 0.64 | 0.8% | 1.0× |

| Coalesced | 28.62 | 4.80 | 5.7% | 7.5× |

| Shared Memory | 22.52 | 6.10 | 7.2% | 9.5× |

| 1D Block Tiling | 7.43 | 18.49 | 21.8% | 28.9× |

| PyTorch | 1.62 | 84.62 | 100% | 132.1× |

NCU profiling

Now, let’s look at how things have changed and confirm our results using ncu!

NCU Summary Stats

We can see the warning related to MIO Throttle Stalls and Shared Store Bank Conflicts are gone now

NCU Instruction Mix

LDS is no longer the majority of instruction mix.

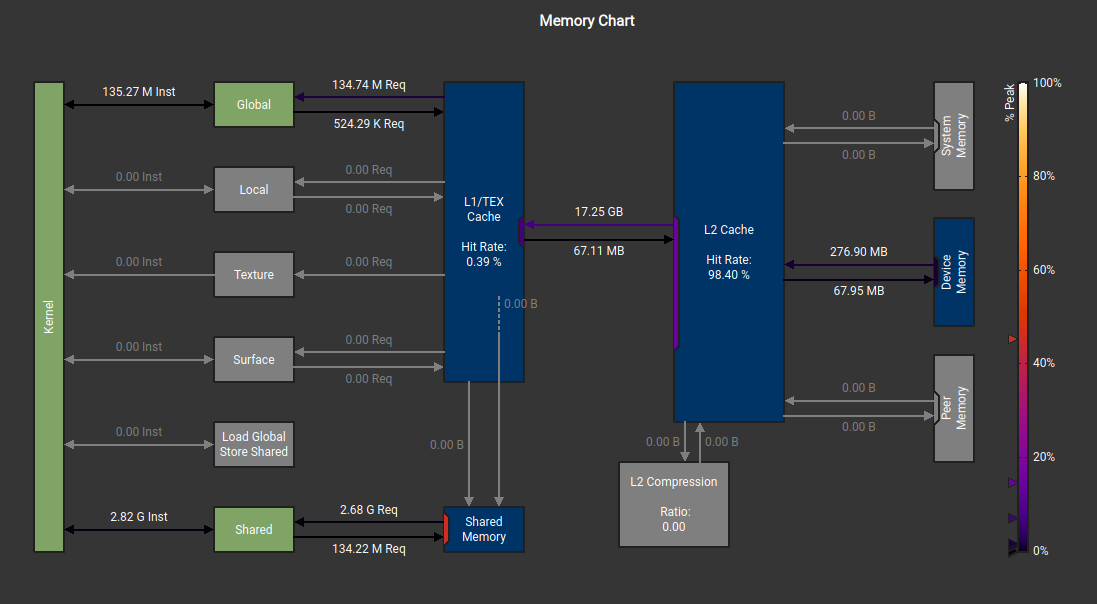

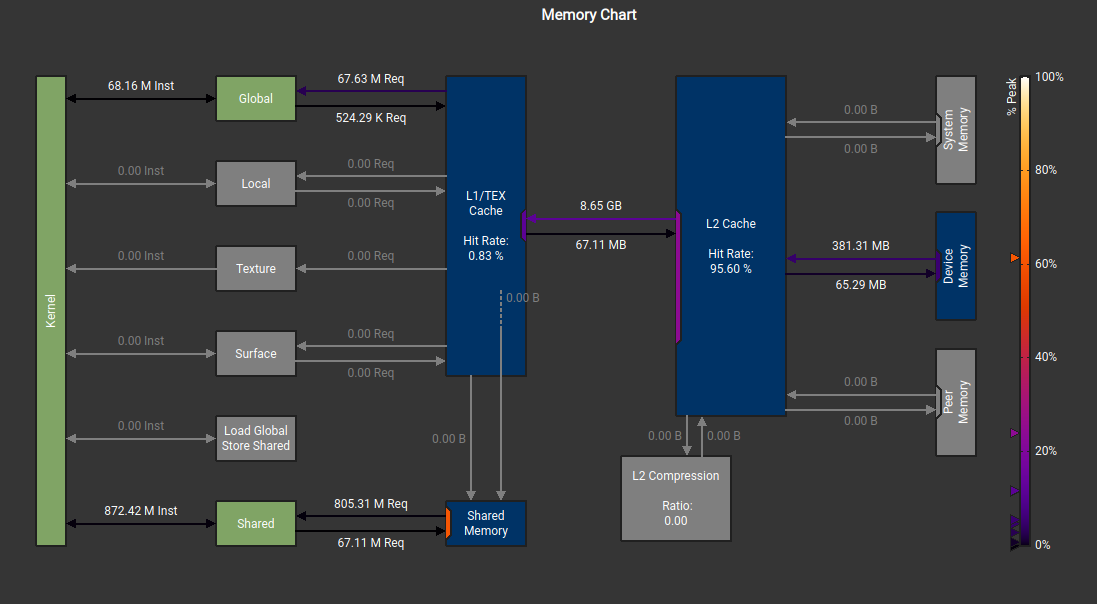

Memory Charts Comparison

The shared memory bandwidth used is also showed in the memory charts. See the comparison below:

Key takeaway from 1D blocktiling approach is as we calculate more values per thread, we reduce the number of loads/stores per result i.e. increase arithmetic intensity

2D Block Tiling

Concept

2D tiling is a natural extension and enables each thread to compute TM × TN outputs (e.g., 8 × 8 = 64 outputs) instead of just TM outputs (e.g., 8 outputs) in 1D tiling, resulting in TN× more computation per thread with more register usage. Of course, we would need to keep within the register memory bounds but this is how we can increase arithmetic intensity further by reusing shared memory. This creates bidirectional register reuse:

- Each value from A (loaded into

register_m[TM]) is reused across TN computations - Each value from B (loaded into

register_n[TN]) is reused across TM computations - This forms an “outer product” pattern at the register level

In the code, I implemented two separate kernels such that a Main Kernel (No Bounds Checking) handles all interior blocks where every memory access is guaranteed to be in-bounds, helping with zero thread divergence since all threads can execute the same logic. An Edge Kernel (With Bounds Checking) handles boundary blocks at the right edge, bottom edge, and corner. This structures the code well but the code is starting to look fairly complex at this point.

Kernel

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

template <const int BM, const int BN, const int BK, const int TM, const int TN>

__global__ void sgemm_blocktiling_2d_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a,

const float *matrix_b, float beta,

float *matrix_c)

{

const uint block_row = blockIdx.x;

const uint block_col = blockIdx.y;

__shared__ float tile_a[BM * BK];

__shared__ float tile_b[BK * BN];

const uint thread_row = threadIdx.x / (BN / TN);

const uint thread_col = threadIdx.x % (BN / TN);

const uint num_threads = (BM / TM) * (BN / TN);

matrix_a += block_row * BM * num_cols_a;

matrix_b += block_col * BN;

matrix_c += block_row * BM * num_cols_b + block_col * BN;

float thread_results[TM * TN] = {0.0f};

float register_m[TM] = {0.0f};

float register_n[TN] = {0.0f};

for (uint block_k_idx = 0; block_k_idx < num_cols_a; block_k_idx += BK)

{

#pragma unroll

for (uint load_offset = 0; load_offset < BM * BK; load_offset += num_threads)

{

uint load_idx = threadIdx.x + load_offset;

uint a_row = load_idx / BK;

uint a_col = load_idx % BK;

tile_a[load_idx] = matrix_a[a_row * num_cols_a + a_col];

}

#pragma unroll

for (uint load_offset = 0; load_offset < BK * BN; load_offset += num_threads)

{

uint load_idx = threadIdx.x + load_offset;

uint b_row = load_idx / BN;

uint b_col = load_idx % BN;

tile_b[load_idx] = matrix_b[b_row * num_cols_b + b_col];

}

__syncthreads();

matrix_a += BK;

matrix_b += BK * num_cols_b;

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx)

{

for (uint i = 0; i < TM; ++i)

{

register_m[i] = tile_a[(thread_row * TM + i) * BK + dot_idx];

}

for (uint i = 0; i < TN; ++i)

{

register_n[i] = tile_b[dot_idx * BN + thread_col * TN + i];

}

// Each thread calculates TM x TN outputs

for (uint res_idx_m = 0; res_idx_m < TM; ++res_idx_m)

{

for (uint res_idx_n = 0; res_idx_n < TN; ++res_idx_n)

{

thread_results[res_idx_m * TN + res_idx_n] +=

register_m[res_idx_m] * register_n[res_idx_n];

}

}

}

__syncthreads();

}

#pragma unroll

for (uint res_idx_m = 0; res_idx_m < TM; ++res_idx_m)

{

#pragma unroll

for (uint res_idx_n = 0; res_idx_n < TN; ++res_idx_n)

{

const uint c_idx = (thread_row * TM + res_idx_m) * num_cols_b +

(thread_col * TN + res_idx_n);

matrix_c[c_idx] = alpha * thread_results[res_idx_m * TN + res_idx_n] +

beta * matrix_c[c_idx];

}

}

}

You will notice we are using #pragma unroll directives in the code. What does #pragma unroll do?

It is a compiler optimization technique that can, for example, replace a piece of code like

1

2

for (int i = 0; i < 5; i++ )

b[i] = i;

with

1

2

3

4

5

b[0] = 0;

b[1] = 1;

b[2] = 2;

b[3] = 3;

b[4] = 4;

by putting #pragma unroll directive right before the loop. I mostly used it since I found it in bunch of other example kernels I saw.

Caller

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

void sgemm_blocktiling_2d(const torch::Tensor &matrix_a, const torch::Tensor &matrix_b,

torch::Tensor &output_matrix, float alpha, float beta)

{

// ... same as previous kernels

constexpr int BM = 64;

constexpr int BN = 64;

constexpr int BK = 8;

constexpr int TM = 8;

constexpr int TN = 8;

// Number of threads launches as part of the block

// (64 / 8) * (64 / 8) = 64

dim3 block_dim((BM / TM) * (BN / TN));

const int num_blocks_m = ceil_div(num_rows_a, BM);

const int num_blocks_n = ceil_div(num_cols_b, BN);

const int main_blocks_m = num_rows_a / BM;

const int main_blocks_n = num_cols_b / BN;

if (main_blocks_m > 0 && main_blocks_n > 0)

{

dim3 main_grid(main_blocks_m, main_blocks_n);

sgemm_blocktiling_2d_kernel<BM, BN, BK, TM, TN><<<main_grid, block_dim>>>(

num_rows_a, num_cols_b, num_cols_a,

alpha, d_matrix_a, d_matrix_b, beta, d_output_matrix);

}

// ... handle edge kernels

}

Performance Analysis

Looking at the benchmark results for 4096×4096 matrices, the 2D block tiling kernel shows further performance gains:

Performance Improvement:

- 1.66× TFLOPS improvement over 1D block tiling (18.40 → 30.55 TFLOPS)

- 1.66× bandwidth improvement (26.96 → 44.75 GB/s)

- 38.7% of PyTorch’s performance (79.00 TFLOPS)

- 46.9× faster than naive (0.652 → 30.55 TFLOPS)

Comparison vs Previous Kernels (4096×4096):

| Kernel | Time (ms) | TFLOPS | Bandwidth (GB/s) | vs PyTorch | Speedup over Naive |

|---|---|---|---|---|---|

| Naive | 210.75 | 0.65 | 0.96 | 0.8% | 1.0× |

| Coalesced | 26.96 | 5.10 | 7.47 | 6.5% | 7.8× |

| Shared Memory | 22.67 | 6.06 | 8.88 | 7.7% | 9.3× |

| 1D Block Tiling | 7.47 | 18.40 | 26.96 | 23.3% | 28.2× |

| 2D Block Tiling | 4.50 | 30.55 | 44.75 | 38.7% | 46.9× |

| PyTorch | 1.74 | 79.00 | 115.73 | 100% | 121.2× |

Performance Across Matrix Sizes

As next steps, when we check our kernel in Nsight compute, we get more pointers to improve performance:

- L1TEX Global Store Access Pattern: The memory access pattern for global stores to L1TEX might not be optimal. On average, only 4.0 of the 32 bytes transmitted per sector are utilized by each thread. This could possibly be caused by a stride between threads. Check the Source Counters section for uncoalesced global stores.

- Shared Load Bank Conflicts: The memory access pattern for shared loads might not be optimal and causes on average a 5.6 - way bank conflict across all 167772160 shared load requests.This results in 503316480 bank conflicts, which represent 53.57% of the overall 939534036 wavefronts for shared loads. Check the Source Counters section for uncoalesced shared loads.

- Uncoalesced Shared Accesses: This kernel has uncoalesced shared accesses resulting in a total of 369098752 excessive wavefronts (37% of the total 1006632960 wavefronts). Check the L1 Wavefronts Shared Excessive table for the primary source locations. The CUDA Best Practices Guide has an example on optimizing shared memory accesses.

Vectorized Memory Access

Concept

As we have realized already, GEMMs are bottlenecked by memory transfer speed. Another optimization that can increase effective bandwidth utilization is to use vectorized memory operations.

CUDA provides built-in vector types (float2, float4, int2, int4) that enable loading or storing multiple values in a single instruction. Instead of issuing four separate 32-bit loads, a single float4 load can fetch 128 bits (16 bytes) at once.

Good reference for Vectorized Memory Access for Performance: CUDA Pro Tip: Increase Performance with Vectorized Memory Access

In our 2D block tiling kernel, each thread loads elements from shared memory to registers. Currently, these loads happen as individual 32-bit transactions:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

// Current approach: scalar loads

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx) {

// Load TM elements from tile_a into registers

for (uint i = 0; i < TM; ++i) {

register_m[i] = tile_a[(thread_row * TM + i) * BK + dot_idx]; // 32-bit load

}

// Load TN elements from tile_b into registers

for (uint i = 0; i < TN; ++i) {

register_n[i] = tile_b[dot_idx * BN + thread_col * TN + i]; // 32-bit load

}

// ... compute outer product

}

If TM=8, we’re issuing 8 separate load instructions. With vectorization, we can combine these into 2 loads of float4. Let’s see how this works:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

// Vectorized approach: load 4 elements at once

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx) {

// Load 8 elements from tile_a using 2× float4 instead of 8× float

*reinterpret_cast<float4*>(®ister_m[0]) =

*reinterpret_cast<const float4*>(&tile_a[(thread_row * TM) * BK + dot_idx]);

*reinterpret_cast<float4*>(®ister_m[4]) =

*reinterpret_cast<const float4*>(&tile_a[(thread_row * TM + 4) * BK + dot_idx]);

// Load 8 elements from tile_b using 2× float4 instead of 8× float

*reinterpret_cast<float4*>(®ister_n[0]) =

*reinterpret_cast<const float4*>(&tile_b[dot_idx * BN + thread_col * TN]);

*reinterpret_cast<float4*>(®ister_n[4]) =

*reinterpret_cast<const float4*>(&tile_b[dot_idx * BN + thread_col * TN + 4]);

}

When you load a float4, the compiler emits a 128-bit vectorized instruction (LDG.E.128) instead of four 32-bit loads (LDG.E):

| Load Type | Size | Instruction | Elements | Instructions Needed |

|---|---|---|---|---|

float | 32 bits | LDG.E | 1 | 4 (for 4 elements) |

float2 | 64 bits | LDG.E.64 | 2 | 2 (for 4 elements) |

float4 | 128 bits | LDG.E.128 | 4 | 1 (for 4 elements) |

Vectorized loads only work efficiently when data is properly aligned to vector size e.g. 16 bytes for

float4and access patterns respect alignment boundaries

1

2

3

4

5

6

7

8

9

10

/*

- 1 vectorized load instruction

- Guaranteed single 128-bit memory transaction

- Hardware-enforced coalescing

*/

float4 vec = *reinterpret_cast<const float4*>(&A[i]); // LDG.E.128 (128-bit)

float a = vec.x;

float b = vec.y;

float c = vec.z;

float d = vec.w;

Trade-Offs: Vectorized

float4loads reduce memory transactions but increase register pressure (potentially lowering occupancy), require tail handling for non-divisible sizes, and may not benefit shared memory accesses with strided layouts without restructuring.NOTE: In our case, we will skip the tail handling and assume the inputs are aligned to the block sizes used in the kernel just to simplify the kernel

Kernel

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

template <const int BM, const int BN, const int BK, const int TM, const int TN>

__global__ void sgemm_vectorize_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a,

const float *matrix_b, float beta,

float *matrix_c)

{

const uint block_row = blockIdx.y;

const uint block_col = blockIdx.x;

// Thread indices for computing output tile

const uint thread_col = threadIdx.x % (BN / TN);

const uint thread_row = threadIdx.x / (BN / TN);

// Shared memory tiles - stored in column-major for A to enable coalescing

__shared__ float tile_a[BM * BK];

__shared__ float tile_b[BK * BN];

// Position matrix pointers at the start of this block's tile

matrix_a += block_row * BM * num_cols_a;

matrix_b += block_col * BN;

matrix_c += block_row * BM * num_cols_b + block_col * BN;

// Thread indices for vectorized loading

// Load 4 floats at a time using float4

const uint inner_row_a = threadIdx.x / (BK / 4);

const uint inner_col_a = threadIdx.x % (BK / 4);

const uint inner_row_b = threadIdx.x / (BN / 4);

const uint inner_col_b = threadIdx.x % (BN / 4);

// Allocate register storage

float thread_results[TM * TN] = {0.0f};

float register_m[TM] = {0.0f};

float register_n[TN] = {0.0f};

// Outer loop over K dimension

for (uint block_k_idx = 0; block_k_idx < num_cols_a; block_k_idx += BK) {

// Load tile_a using float4 vectorized loads

// Store in transposed layout: tile_a[col][row] for coalesced shared memory access

float4 tmp_a = reinterpret_cast<const float4*>(

&matrix_a[inner_row_a * num_cols_a + inner_col_a * 4])[0];

tile_a[(inner_col_a * 4 + 0) * BM + inner_row_a] = tmp_a.x;

tile_a[(inner_col_a * 4 + 1) * BM + inner_row_a] = tmp_a.y;

tile_a[(inner_col_a * 4 + 2) * BM + inner_row_a] = tmp_a.z;

tile_a[(inner_col_a * 4 + 3) * BM + inner_row_a] = tmp_a.w;

// Load tile_b using float4 vectorized loads

// Store in row-major layout: tile_b[row][col]

float4 tmp_b = reinterpret_cast<const float4*>(

&matrix_b[inner_row_b * num_cols_b + inner_col_b * 4])[0];

tile_b[inner_row_b * BN + inner_col_b * 4 + 0] = tmp_b.x;

tile_b[inner_row_b * BN + inner_col_b * 4 + 1] = tmp_b.y;

tile_b[inner_row_b * BN + inner_col_b * 4 + 2] = tmp_b.z;

tile_b[inner_row_b * BN + inner_col_b * 4 + 3] = tmp_b.w;

__syncthreads();

// Advance pointers for next tile

matrix_a += BK;

matrix_b += BK * num_cols_b;

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx) {

// Load TM elements from tile_a (transposed layout)

#pragma unroll

for (uint i = 0; i < TM; ++i) {

register_m[i] = tile_a[dot_idx * BM + thread_row * TM + i];

}

// Load TN elements from tile_b

#pragma unroll

for (uint i = 0; i < TN; ++i) {

register_n[i] = tile_b[dot_idx * BN + thread_col * TN + i];

}

// Outer product accumulation

#pragma unroll

for (uint res_idx_m = 0; res_idx_m < TM; ++res_idx_m) {

#pragma unroll

for (uint res_idx_n = 0; res_idx_n < TN; ++res_idx_n) {

thread_results[res_idx_m * TN + res_idx_n] +=

register_m[res_idx_m] * register_n[res_idx_n];

}

}

}

__syncthreads();

}

// Write results with alpha/beta scaling

#pragma unroll

for (uint res_idx_m = 0; res_idx_m < TM; ++res_idx_m) {

#pragma unroll

for (uint res_idx_n = 0; res_idx_n < TN; ++res_idx_n) {

const uint c_idx = (thread_row * TM + res_idx_m) * num_cols_b +

(thread_col * TN + res_idx_n);

matrix_c[c_idx] = alpha * thread_results[res_idx_m * TN + res_idx_n] +

beta * matrix_c[c_idx];

}

}

}

We have removed the caller code since that is unchanged

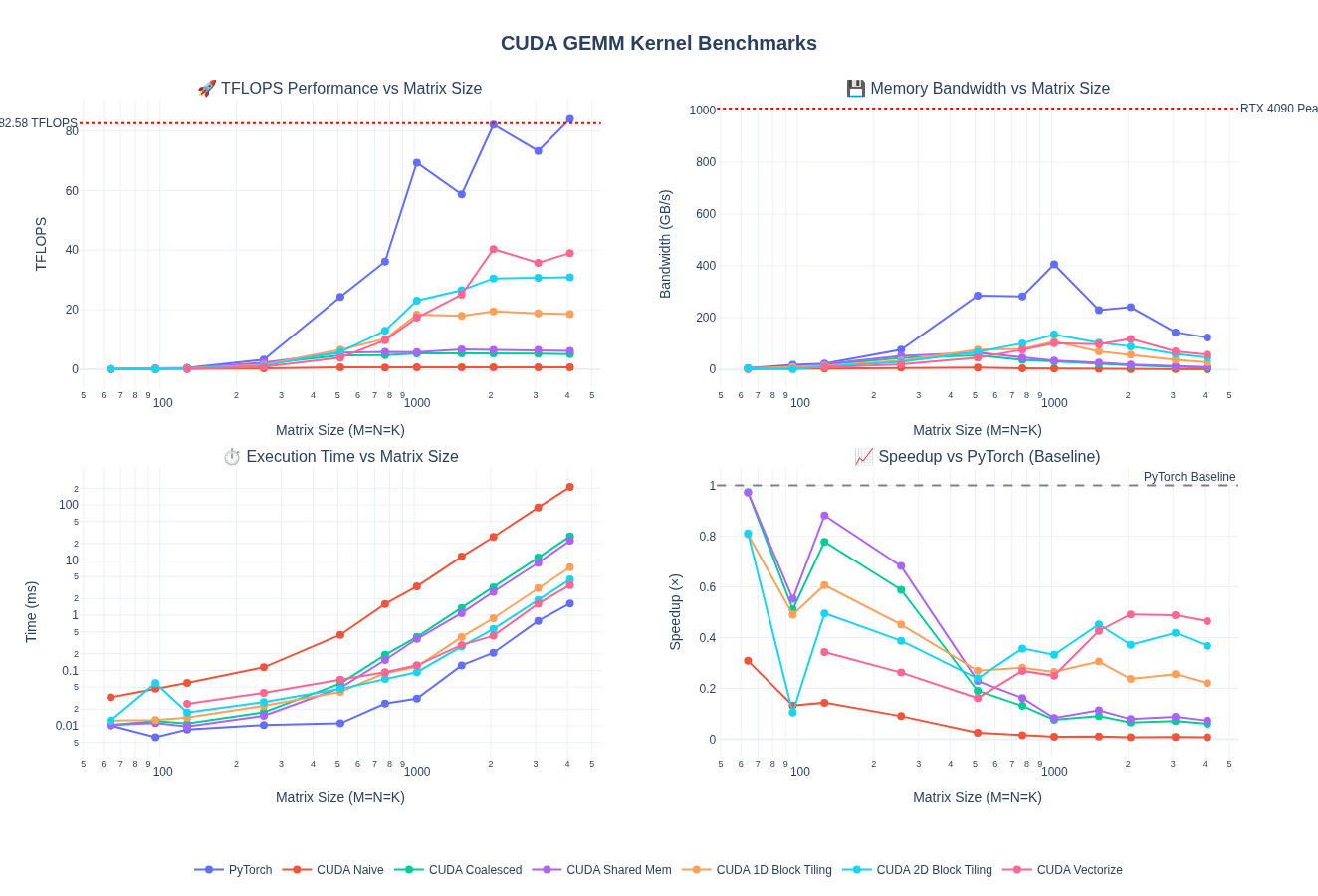

Performance Analysis

The vectorized kernel shows mixed results — performance degrades compared to 2D block tiling for small to medium matrices but shows significant improvements at larger sizes.

Performance at 4096×4096:

- 1.27× TFLOPS improvement over 2D block tiling (30.85 → 39.00 TFLOPS)

- 1.26× bandwidth improvement (45.19 → 57.14 GB/s)

- 46.3% of PyTorch’s performance (84.23 TFLOPS)

- 59.7× faster than naive (0.653 → 39.00 TFLOPS)

Performance Across Matrix Sizes

Comparison vs Previous Kernels (4096×4096):

| Kernel | Time (ms) | TFLOPS | Bandwidth (GB/s) | vs PyTorch | Speedup over Naive |

|---|---|---|---|---|---|

| Naive | 210.33 | 0.65 | 0.96 | 0.8% | 1.0× |

| Coalesced | 26.92 | 5.11 | 7.48 | 6.1% | 7.8× |

| Shared Memory | 22.42 | 6.13 | 8.98 | 7.3% | 9.4× |

| 1D Block Tiling | 7.44 | 18.48 | 27.07 | 21.9% | 28.3× |

| 2D Block Tiling | 4.46 | 30.85 | 45.19 | 36.6% | 47.3× |

| Vectorized | 3.52 | 39.00 | 57.14 | 46.3% | 59.7× |

| PyTorch | 1.63 | 84.23 | 123.38 | 100% | 129.0× |

Nice! We are at almost 60% for the peak performance for 4096 x 4096! Let’s keep going!

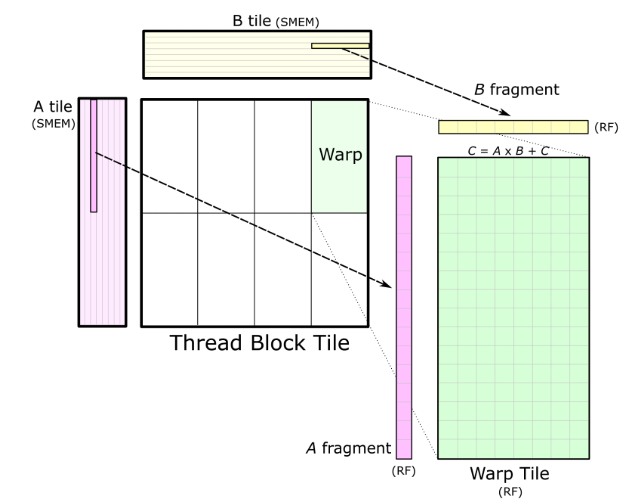

Warp Tiling

Concept

After optimizing thread-level and block-level tiling, the next step is warp-level tiling to exploit the natural 32-thread warp execution unit. This can be leveraged to achieve better register reuse and computation efficiency.

What is Warp Tiling?

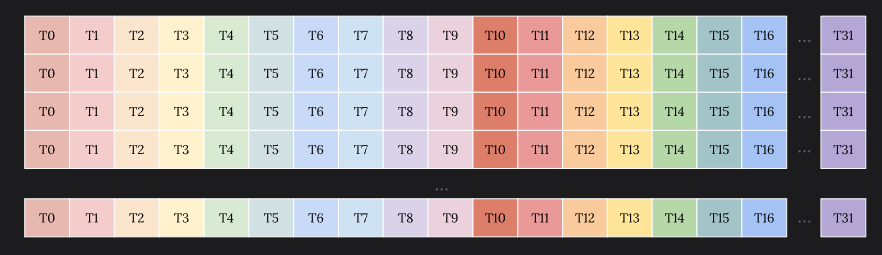

As we have discussed previously, a warp is the fundamental execution unit in NVIDIA GPUs consisting of 32 threads that execute in SIMT (Single Instruction, Multiple Thread) fashion. Warp tiling introduces an additional level in the memory hierarchy.

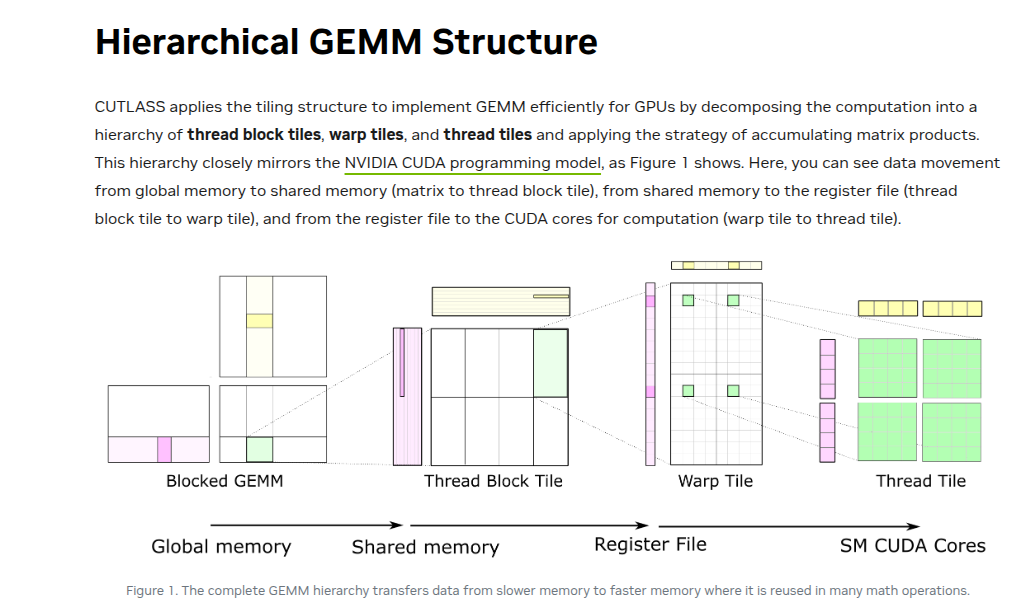

FWIW - we are slowly approaching cutlass territory. Cutlass library implements a sophisticated tiling strategy that mirrors the GPU’s memory hierarchy at multiple levels

Source: NVIDIA CUTLASS Blog

Source: NVIDIA CUTLASS Blog

To take advantage of this hierarchy, warps can offer another layer of tiling such that while loading data from shared memory into registers, we do it at warp tile level. In addition, each thread in the warp then calculates its own small fraction of computation.

Thread tile (next level tiling) is effectively 2D Tiling that we discussed in the previous section.

Pseudo code for this process looks like:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

for (k = 0; k < K; k += BK) {

// 1. Load A_tile[BM x BK] and B_tile[BK x BN] into shared memory

__syncthreads();

// 2. Each warp loads its own subtile of A and B into registers

// (from shared memory)

for (int kk = 0; kk < BK; ++kk) {

// 3. Each thread computes on its own 4x4 tile in registers

C_reg[m][n] += A_frag[m][kk] * B_frag[kk][n];

}

__syncthreads();

}

// 4. Write C_reg results from each thread to global C

In the later sections, we will look into Tensor Cores. Enabling warp-tiling is a key milestone for us to get there. But let’s first look at how this works through below visualization with sample values for tile widths and warp size:

NVIDIA CUTLASS Documentation has a lot more details and is pretty comprehensive in explaining different layers of tiling.

Kernel

NOTE: I have removed the non-block size mulitple kernel handling below since the code is getting fairly complicated with multiple layers of nesting for different levels of tiles. I will continue to ignore tail handling in subsequent sections.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

constexpr int WARPSIZE = 32;

/*

Warp-level tiling GEMM kernel.

Hierarchy: Block (BM x BN) → Warps (WM x WN) → Warp Subtiles (WSUBM x WSUBN) → Thread Tiles (TM x TN)

*/

template <const int BM, const int BN, const int BK, const int row_stride_a, const int row_stride_b>

__device__ void load_from_gmem(int num_cols_b, int num_cols_a,

const float *matrix_a, const float *matrix_b,

float *tile_a, float *tile_b,

int inner_row_a, int inner_col_a,

int inner_row_b, int inner_col_b)

{

for (uint offset = 0; offset + row_stride_a <= BM; offset += row_stride_a)

{

const float4 tmp_a = reinterpret_cast<const float4 *>(

&matrix_a[(inner_row_a + offset) * num_cols_a + inner_col_a * 4])[0];

tile_a[(inner_col_a * 4 + 0) * BM + inner_row_a + offset] = tmp_a.x;

tile_a[(inner_col_a * 4 + 1) * BM + inner_row_a + offset] = tmp_a.y;

tile_a[(inner_col_a * 4 + 2) * BM + inner_row_a + offset] = tmp_a.z;

tile_a[(inner_col_a * 4 + 3) * BM + inner_row_a + offset] = tmp_a.w;

}

for (uint offset = 0; offset + row_stride_b <= BK; offset += row_stride_b)

{

reinterpret_cast<float4 *>(

&tile_b[(inner_row_b + offset) * BN + inner_col_b * 4])[0] =

reinterpret_cast<const float4 *>(

&matrix_b[(inner_row_b + offset) * num_cols_b + inner_col_b * 4])[0];

}

}

template <const int BM, const int BN, const int BK, const int WM, const int WN,

const int WMITER, const int WNITER, const int WSUBM, const int WSUBN,

const int TM, const int TN>

__device__ void process_warp_tile(float *register_m, float *register_n, float *thread_results,

const float *tile_a, const float *tile_b,

const uint warp_row, const uint warp_col,

const uint thread_row_in_warp, const uint thread_col_in_warp)

{

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx)

{

for (uint wsub_row_idx = 0; wsub_row_idx < WMITER; ++wsub_row_idx)

{

for (uint i = 0; i < TM; ++i)

{

register_m[wsub_row_idx * TM + i] =

tile_a[(dot_idx * BM) + warp_row * WM + wsub_row_idx * WSUBM +

thread_row_in_warp * TM + i];

}

}

for (uint wsub_col_idx = 0; wsub_col_idx < WNITER; ++wsub_col_idx)

{

for (uint i = 0; i < TN; ++i)

{

register_n[wsub_col_idx * TN + i] =

tile_b[(dot_idx * BN) + warp_col * WN + wsub_col_idx * WSUBN +

thread_col_in_warp * TN + i];

}

}

for (uint wsub_row_idx = 0; wsub_row_idx < WMITER; ++wsub_row_idx)

{

for (uint wsub_col_idx = 0; wsub_col_idx < WNITER; ++wsub_col_idx)

{

for (uint res_idx_m = 0; res_idx_m < TM; ++res_idx_m)

{

for (uint res_idx_n = 0; res_idx_n < TN; ++res_idx_n)

{

thread_results[(wsub_row_idx * TM + res_idx_m) * (WNITER * TN) +

(wsub_col_idx * TN) + res_idx_n] +=

register_m[wsub_row_idx * TM + res_idx_m] *

register_n[wsub_col_idx * TN + res_idx_n];

}

}

}

}

}

}

template <const int BM, const int BN, const int BK, const int WM, const int WN,

const int WNITER, const int TM, const int TN, const int NUM_THREADS>

__global__ void __launch_bounds__(NUM_THREADS)

sgemm_warptiling_kernel(int num_rows_a, int num_cols_b, int num_cols_a,

float alpha, const float *matrix_a, const float *matrix_b,

float beta, float *matrix_c)

{

const uint block_row = blockIdx.y;

const uint block_col = blockIdx.x;

const uint warp_idx = threadIdx.x / WARPSIZE;

const uint warp_col = warp_idx % (BN / WN);

const uint warp_row = warp_idx / (BN / WN);

constexpr uint WMITER = (WM * WN) / (WARPSIZE * TM * TN * WNITER);

constexpr uint WSUBM = WM / WMITER;

constexpr uint WSUBN = WN / WNITER;

const uint thread_idx_in_warp = threadIdx.x % WARPSIZE;

const uint thread_col_in_warp = thread_idx_in_warp % (WSUBN / TN);

const uint thread_row_in_warp = thread_idx_in_warp / (WSUBN / TN);

__shared__ float tile_a[BM * BK];

__shared__ float tile_b[BK * BN];

matrix_a += block_row * BM * num_cols_a;

matrix_b += block_col * BN;

matrix_c += (block_row * BM + warp_row * WM) * num_cols_b + block_col * BN + warp_col * WN;

const uint inner_row_a = threadIdx.x / (BK / 4);

const uint inner_col_a = threadIdx.x % (BK / 4);

constexpr uint row_stride_a = (NUM_THREADS * 4) / BK;

const uint inner_row_b = threadIdx.x / (BN / 4);

const uint inner_col_b = threadIdx.x % (BN / 4);

constexpr uint row_stride_b = NUM_THREADS / (BN / 4);

float thread_results[WMITER * TM * WNITER * TN] = {0.0f};

float register_m[WMITER * TM] = {0.0f};

float register_n[WNITER * TN] = {0.0f};

for (uint block_k_idx = 0; block_k_idx < num_cols_a; block_k_idx += BK)

{

load_from_gmem<BM, BN, BK, row_stride_a, row_stride_b>(

num_cols_b, num_cols_a, matrix_a, matrix_b, tile_a, tile_b,

inner_row_a, inner_col_a, inner_row_b, inner_col_b);

__syncthreads();

process_warp_tile<BM, BN, BK, WM, WN, WMITER, WNITER, WSUBM, WSUBN, TM, TN>(

register_m, register_n, thread_results, tile_a, tile_b,

warp_row, warp_col, thread_row_in_warp, thread_col_in_warp);

matrix_a += BK;

matrix_b += BK * num_cols_b;

__syncthreads();

}

for (uint wsub_row_idx = 0; wsub_row_idx < WMITER; ++wsub_row_idx)

{

for (uint wsub_col_idx = 0; wsub_col_idx < WNITER; ++wsub_col_idx)

{

float *matrix_c_interim = matrix_c + (wsub_row_idx * WSUBM) * num_cols_b +

wsub_col_idx * WSUBN;

for (uint res_idx_m = 0; res_idx_m < TM; res_idx_m += 1)

{

for (uint res_idx_n = 0; res_idx_n < TN; res_idx_n += 4)

{

float4 tmp_c = reinterpret_cast<float4 *>(

&matrix_c_interim[(thread_row_in_warp * TM + res_idx_m) * num_cols_b +

thread_col_in_warp * TN + res_idx_n])[0];

const int res_idx = (wsub_row_idx * TM + res_idx_m) * (WNITER * TN) +

wsub_col_idx * TN + res_idx_n;

tmp_c.x = alpha * thread_results[res_idx + 0] + beta * tmp_c.x;

tmp_c.y = alpha * thread_results[res_idx + 1] + beta * tmp_c.y;

tmp_c.z = alpha * thread_results[res_idx + 2] + beta * tmp_c.z;

tmp_c.w = alpha * thread_results[res_idx + 3] + beta * tmp_c.w;

reinterpret_cast<float4 *>(

&matrix_c_interim[(thread_row_in_warp * TM + res_idx_m) * num_cols_b +

thread_col_in_warp * TN + res_idx_n])[0] = tmp_c;

}

}

}

}

}

__launch_bounds__trick was something I learnt after reading a few kernels. It allows us to control kernel occupancy by setting a limit on per-thread register usage i.e by specifying the thread block size and the target number of blocks per SM, the compiler adjusts register allocation accordingly. This was a good resource to understand this attribute.

Caller

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

template <const int BM, const int BN, const int BK, const int WM, const int WN,

const int WNITER, const int TM, const int TN, const int NUM_THREADS>

void sgemm_warptiling(const torch::Tensor &matrix_a, const torch::Tensor &matrix_b,

torch::Tensor &output_matrix, float alpha, float beta)

{

// .. similar to previous kernels

constexpr int WMITER = (WM * WN) / (WARPSIZE * TM * TN * WNITER);

constexpr int WSUBM = WM / WMITER;

constexpr int WSUBN = WN / WNITER;

static_assert(WMITER * WSUBM == WM, "WMITER * WSUBM must equal WM");

static_assert(WNITER * WSUBN == WN, "WNITER * WSUBN must equal WN");

static_assert((BM % WM == 0) && (BN % WN == 0), "Block tile must be divisible by warp tile");

static_assert((WSUBM % TM == 0) && (WSUBN % TN == 0), "Warp subtile must be divisible by thread tile");

// Configure kernel launch

dim3 block_dim(NUM_THREADS);

dim3 grid_dim(CEIL_DIV(num_cols_b, BN), CEIL_DIV(num_rows_a, BM));

// Launch kernel

sgemm_warptiling_kernel<BM, BN, BK, WM, WN, WNITER, TM, TN, NUM_THREADS>

<<<grid_dim, block_dim>>>(

num_rows_a, num_cols_b, num_cols_a,

alpha, d_matrix_a, d_matrix_b, beta, d_output_matrix);

}

// Default configuration wrapper

void sgemm_warptiling_default(const torch::Tensor &matrix_a, const torch::Tensor &matrix_b,

torch::Tensor &output_matrix, float alpha, float beta)

{

// Default configuration: BM=128, BN=128, BK=16, WM=64, WN=64, WNITER=4, TM=8, TN=4, NUM_THREADS=128

// This gives: WMITER=2, WSUBM=32, WSUBN=16

// Warps per block: (128*128)/(64*64) = 4 warps

// Threads needed: 4 warps * 32 threads/warp = 128 threads

sgemm_warptiling<128, 128, 16, 64, 64, 4, 8, 4, 128>(

matrix_a, matrix_b, output_matrix, alpha, beta);

}

Performance Analysis

The warp tiling kernel shows further performance gains at large matrix sizes, achieving the best performance so far.

Performance at 4096×4096:

- 1.17× TFLOPS improvement over vectorized kernel (39.07 → 45.82 TFLOPS)

- 1.17× bandwidth improvement (57.24 → 67.12 GB/s)

- 54.4% of PyTorch’s performance (84.19 TFLOPS)

- 70.1× faster than naive (0.654 → 45.82 TFLOPS)

Comparison vs Previous Kernels (4096×4096):

| Kernel | Time (ms) | TFLOPS | Bandwidth (GB/s) | vs PyTorch | Speedup over Naive |

|---|---|---|---|---|---|

| Naive | 210.29 | 0.65 | 0.96 | 0.8% | 1.0× |

| Coalesced | 26.95 | 5.10 | 7.47 | 6.1% | 7.8× |

| Shared Memory | 22.40 | 6.14 | 8.99 | 7.3% | 9.4× |

| 1D Block Tiling | 7.42 | 18.52 | 27.13 | 22.0% | 28.3× |

| 2D Block Tiling | 4.45 | 30.89 | 45.25 | 36.7% | 47.2× |

| Vectorized | 3.52 | 39.07 | 57.24 | 46.4% | 59.8× |

| Warp Tiling | 3.00 | 45.82 | 67.12 | 54.4% | 70.1× |

| PyTorch | 1.63 | 84.19 | 123.33 | 100% | 128.8× |

Performance Across Matrix Sizes

| Matrix Size | Time (ms) | TFLOPS | vs PyTorch | Speedup vs 2D Tiling |

|---|---|---|---|---|

| 128×128 | 0.021 | 0.20 | 40.2% | 0.81× |

| 512×512 | 0.058 | 4.60 | 18.9% | 0.79× |

| 1024×1024 | 0.103 | 20.77 | 29.8% | 0.90× |

| 2048×2048 | 0.396 | 43.38 | 52.6% | 1.14× |

| 4096×4096 | 3.00 | 45.82 | 54.4% | 1.17× |

| 8192×8192 | 25.69 | 42.80 | 50.9% | 1.12× |

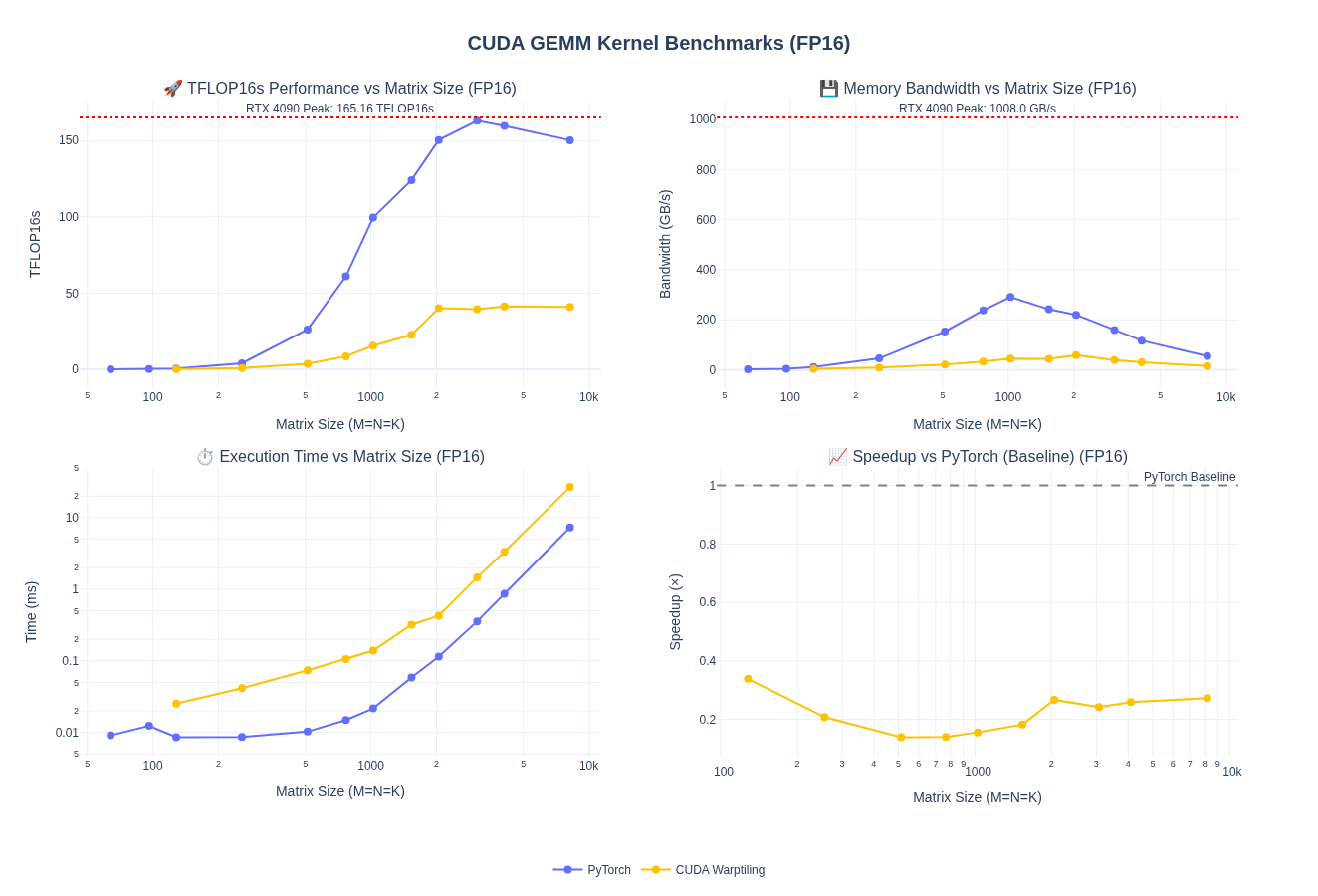

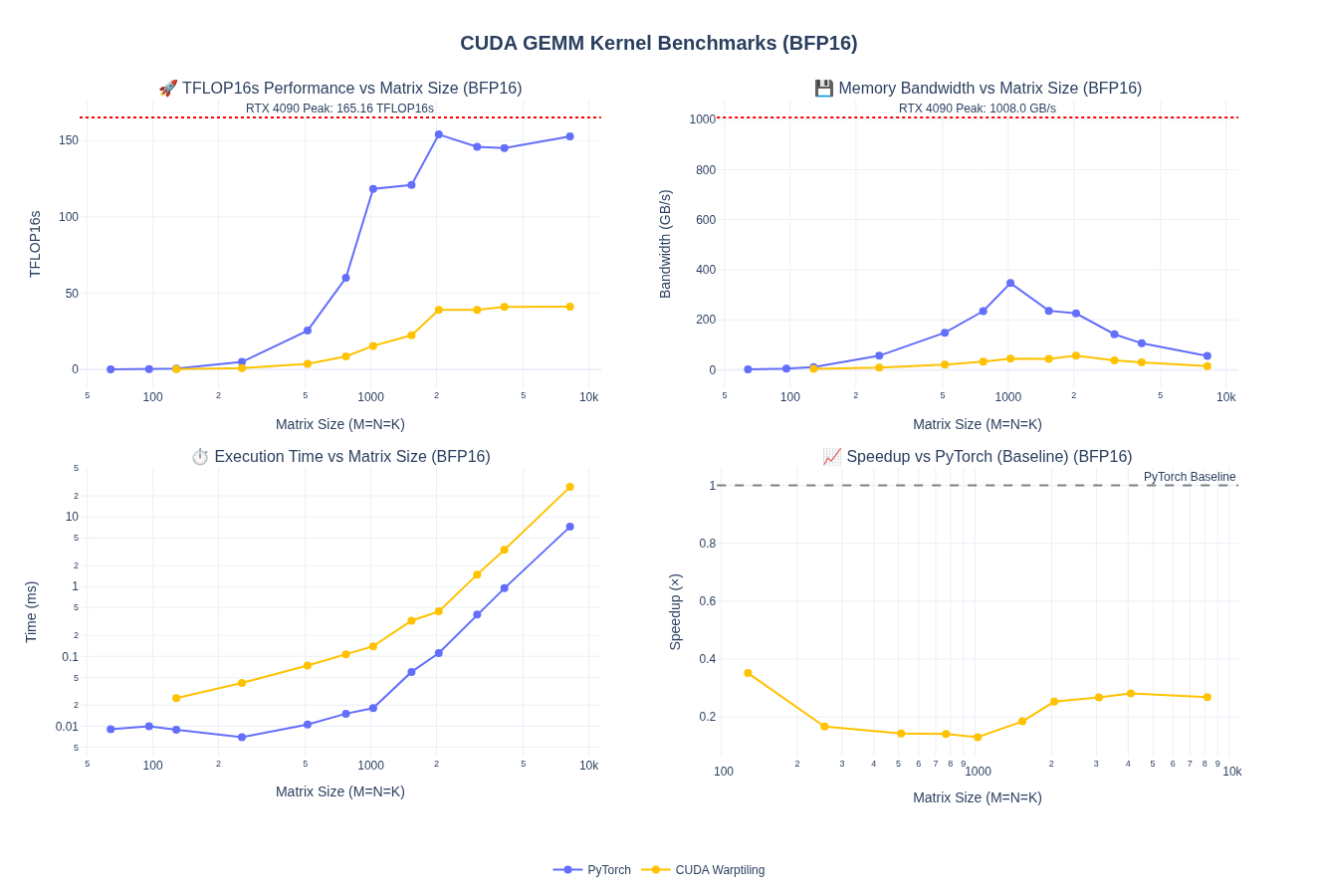

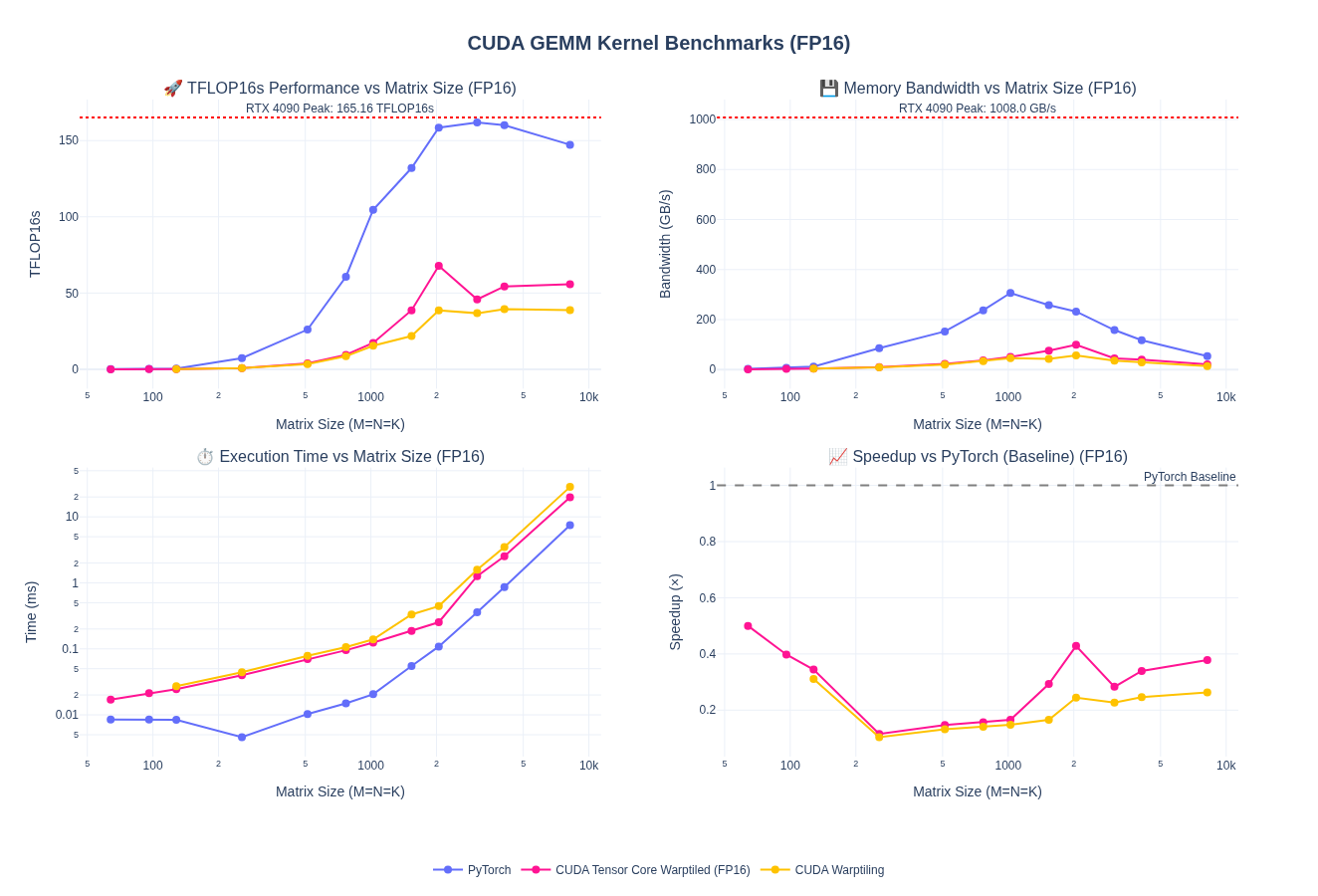

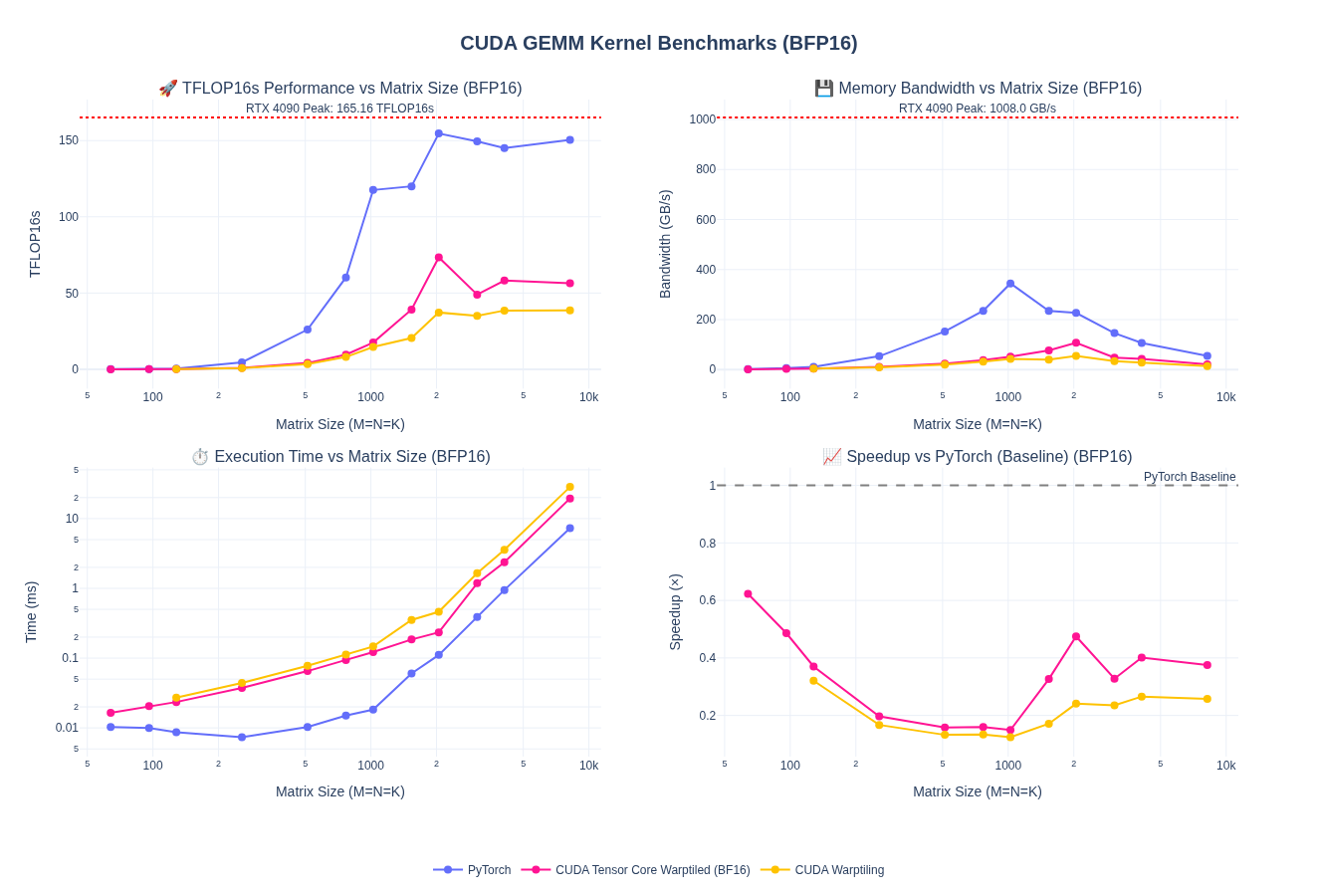

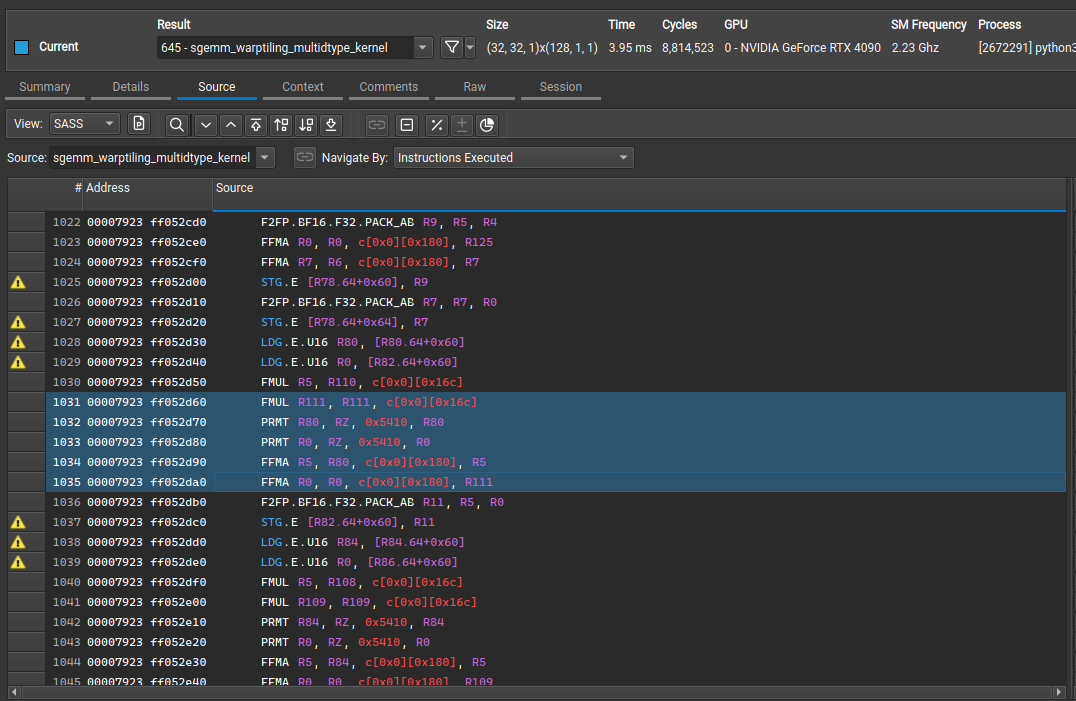

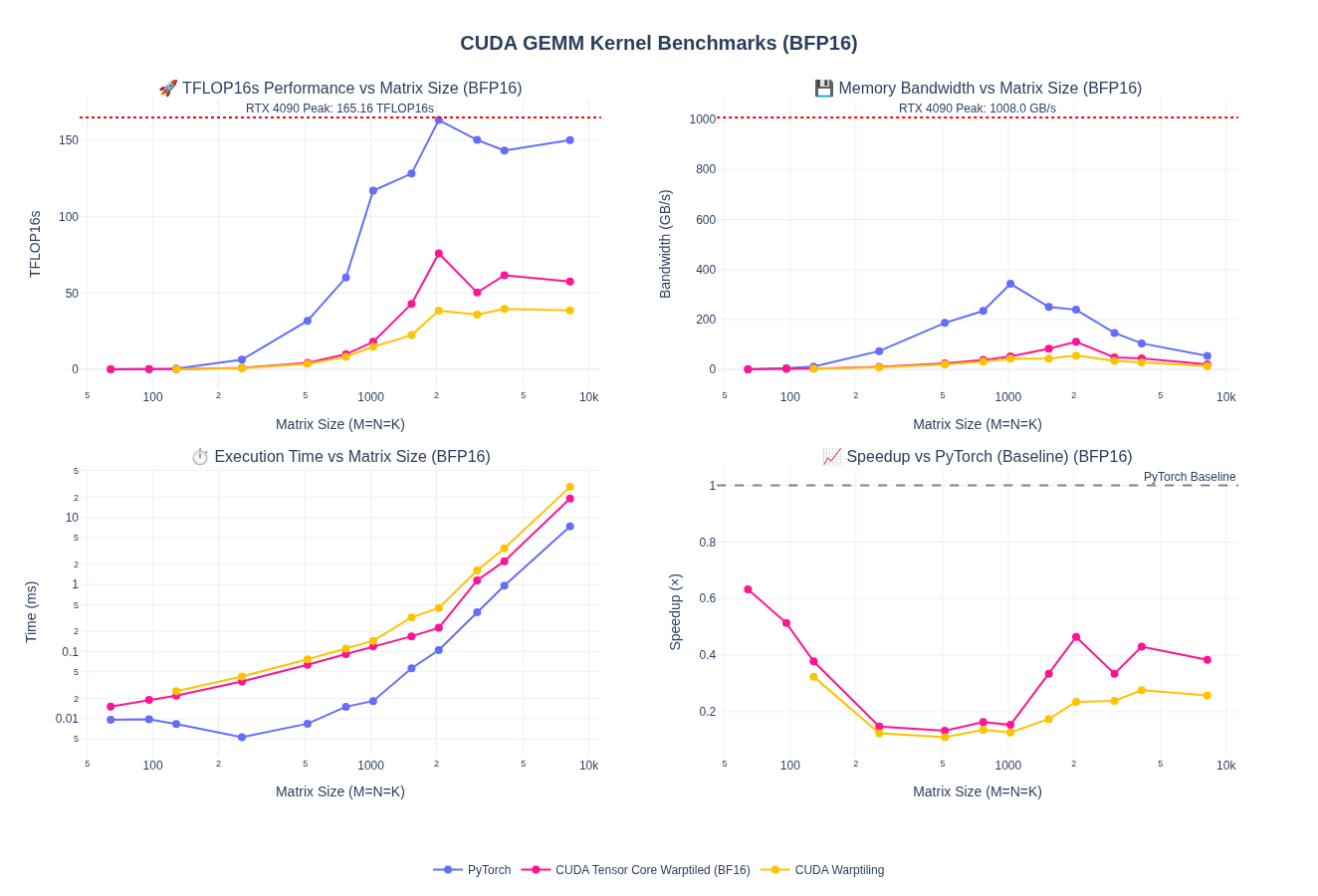

Supporting 16-bit GEMM

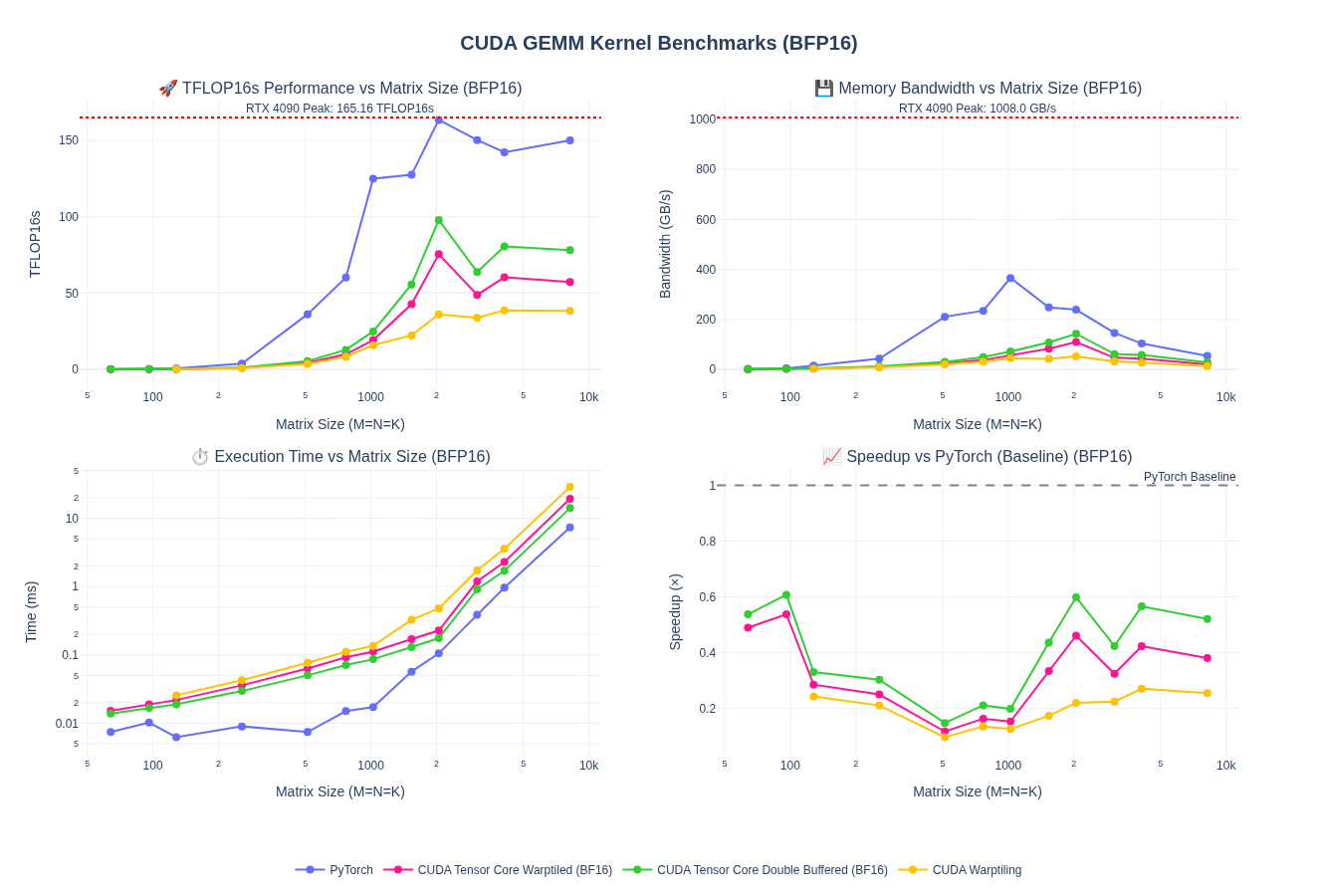

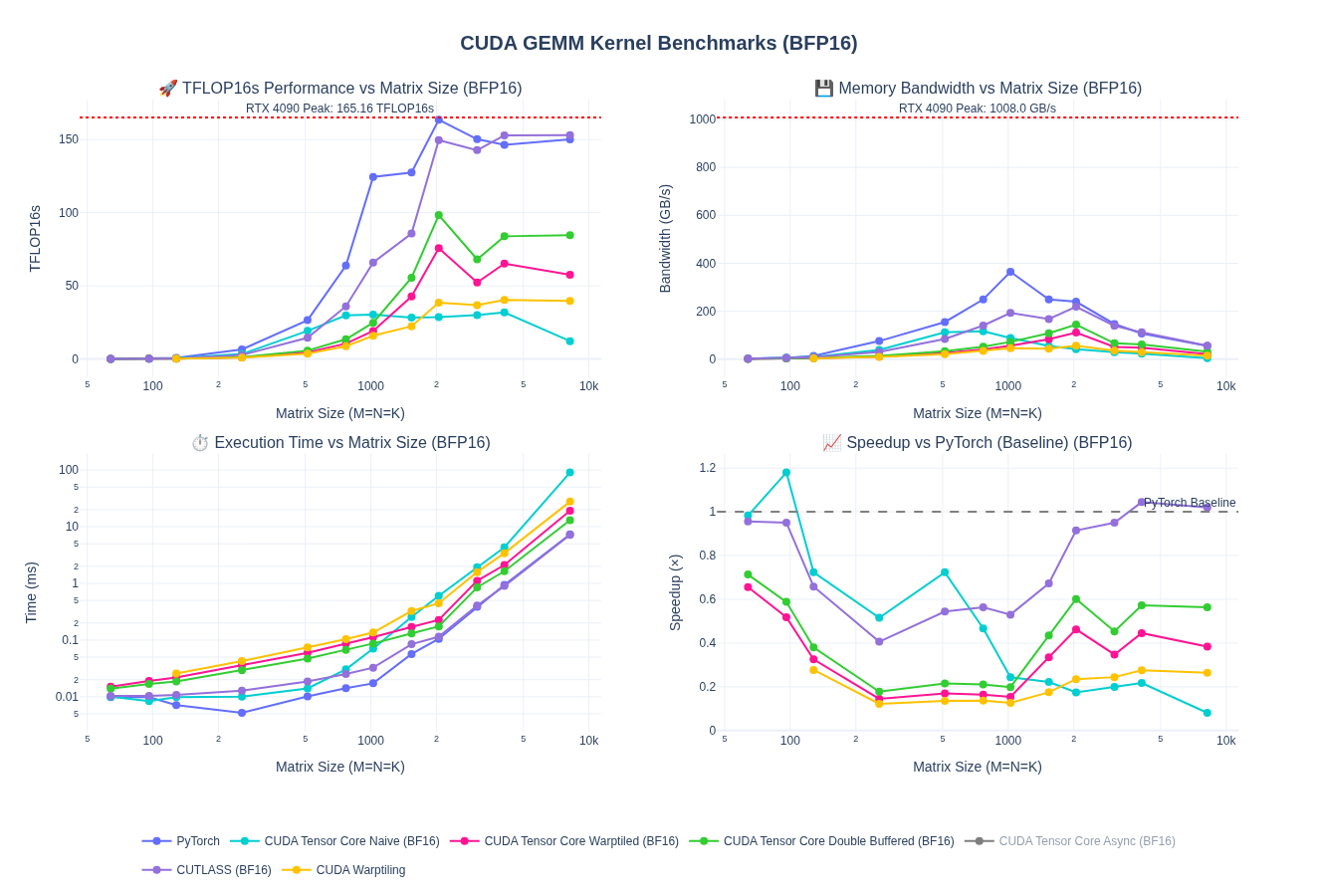

So far, we have worked only on fp32 kernels. Most workloads today increasingly use 16-bit floating-point formats (FP16 and BF16) and even lower precisions such as fp8, fp4, etc to reduce memory bandwidth requirements and increase throughput. While our warp tiling kernel works for FP32, we can extend it to support lower-precision computations in 16-bit. This mostly requires us adapt the kernel to handle multiple dtypes, which is just templating. Although, one thing to note is to get good numeric behavior on the lower prevision kernels, we need to ensure we do accumulation in higher precision such as fp32.

I am leaving out the kernel implementation for this part so we can focus on WMMA and Tensor Cores next. However, here is the baseline performance after porting warptiling technique for fp32 above to fp16/bf16. Spoiler alert: the performance is pretty bad and we are down to about 1/4th of pytorch performance.

NOTE: I haven’t done any tuning of the tile sizes here - just took fp32 kernel as is and update types and handled vectorized loads using float2.

Quick Takeaway: without tensorcores we can’t match the performance of pytorch. So, let’s look at that next!!

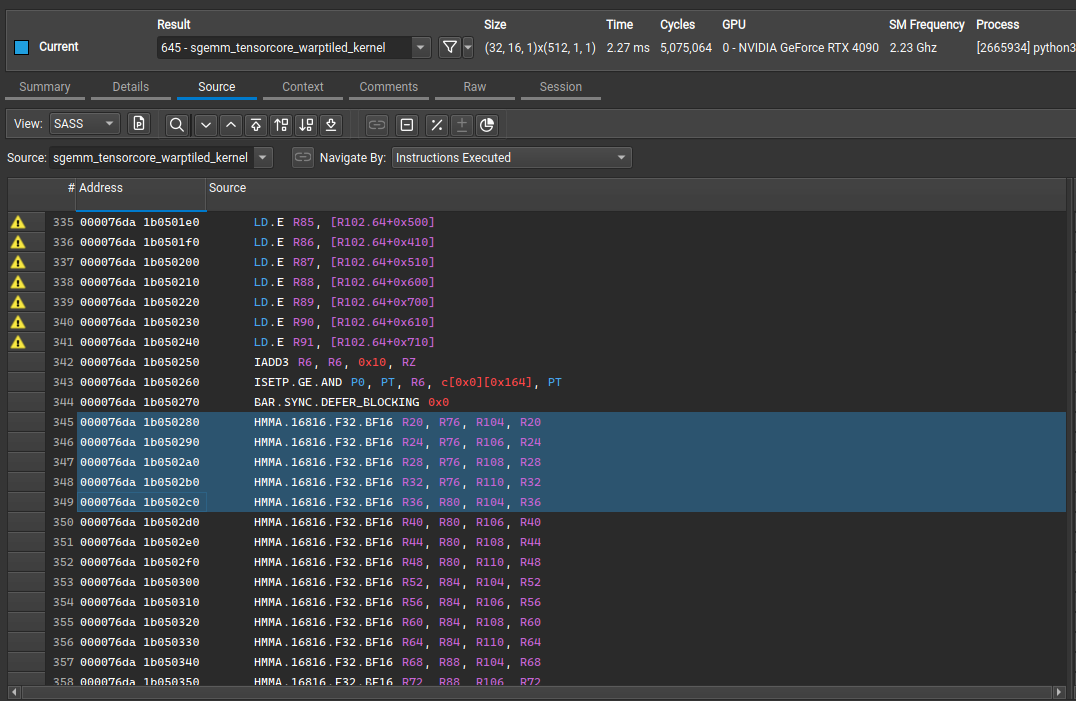

WMMA and Tensorcores

The warp-tiling structure that we discussed earlier can also be implemented using nvidia WMMA api (Warp Matrix Multiply-Accumulate). WMMA provides extensions to the tiling structure and exposes Tensorcore MMA operations.

NOTE: CUTLASS provide even more high-level abstractions for GEMMs. We will discuss that later

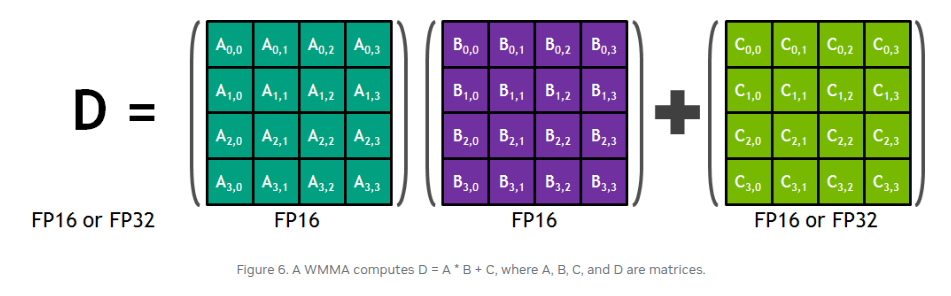

Before we dig further into WMMA, let’s look at what are Tensorcores. At a high level, Tensorcores provide warp-level collective operation for MMA such that 32 threads within a warp collectively hold MMA operands. In other words, the thread-tiling register based outer product can be lowered all the way to the hardware using Tensorcores. Below we represent 4 x 4 x 4 matrix processing array performing D = A * B + C.

Digging a little into specific, here is a good common use case for fp16 matmul with fp32 accumulation:

can be codified as

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

float D[4];

uint32_t const A[2];

uint32_t const B;

float const C[4];

// Example targets 16-by-8-by-8 Tensor Core operation

asm(

"mma.sync.aligned.m16n8k8.row.col.f32.f16.f16.f32 "

" { %0, %1, %2, %3 }, "

" { %4, %5}, "

" %6, "

" { %7, %8, %9, %10 };"

:

"=f"(D[0]), "=f"(D[1]), "=f"(D[2]), "=f"(D[3])

:

"r"(A[0]), "r"(A[1]),

"r"(B),

"f"(C[0]), "f"(C[1])

);

Here,

mma.sync.aligned.m16n8k8.row.col.f32.f16.f16.f32can be understood asmma.sync.alignedinstruction over matrix dimentsion ofM=16, N=8, K=8 (computes C[16×8] = A[16×8] × B[8×8] + C[16×8]).row.colrepresents row-major/column-major layouts for A and B, respectivelly and finallyf32.f16.f16.f32represents data types such that output is 32-bit float (fp32) with A and B as fp16 and accumulation happening in fp32.

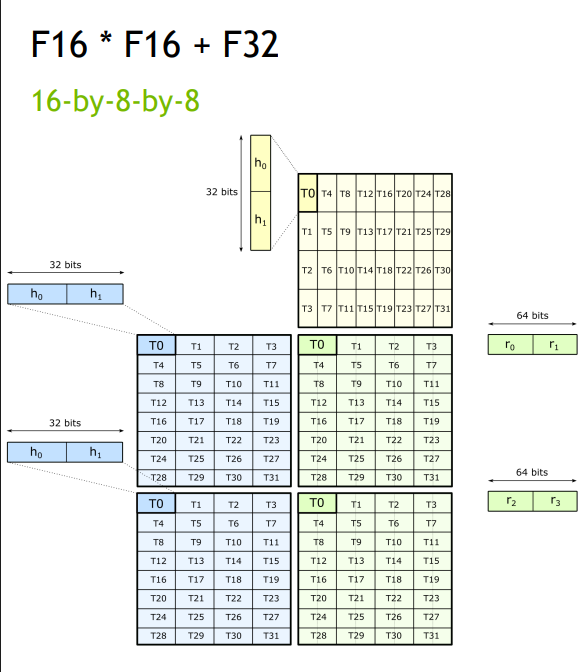

SM80 TC Instruction

Below is the Tensorcore operation on my RTX 4090 for Ada.

More details on Tensorcore instructions in this GTC talk from 2019.

WMMA GEMM Kernel

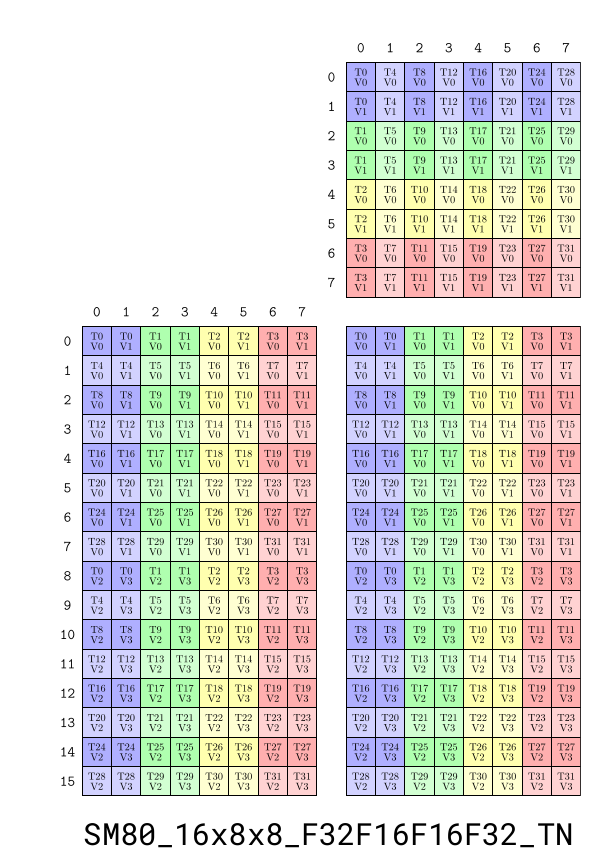

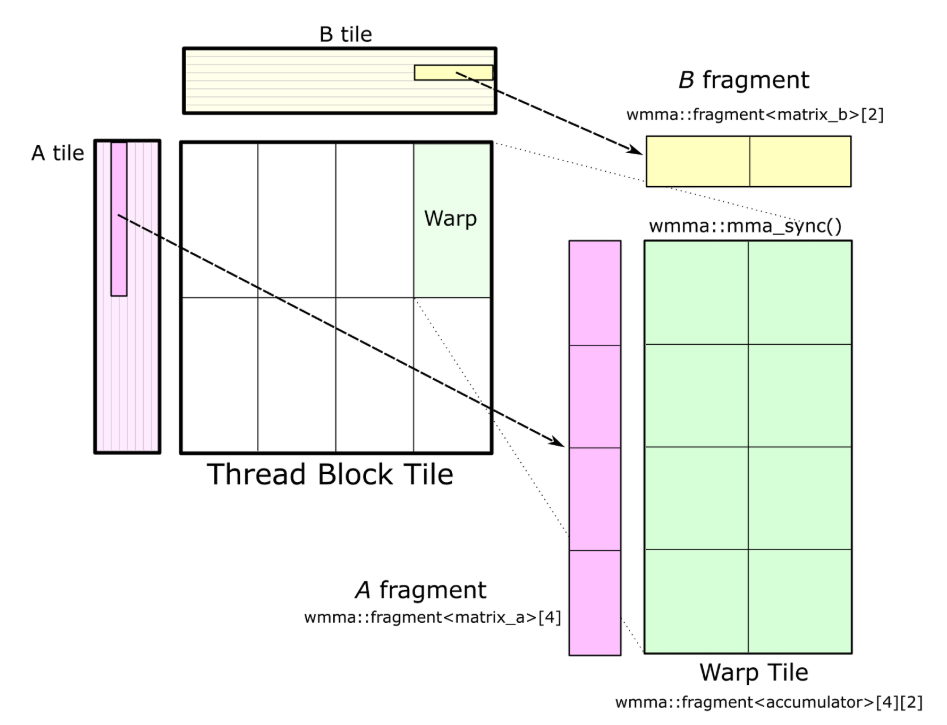

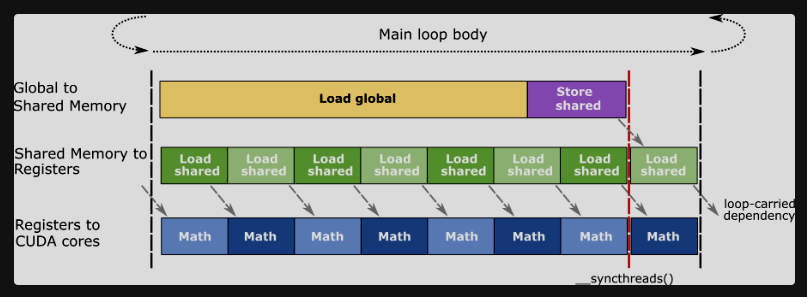

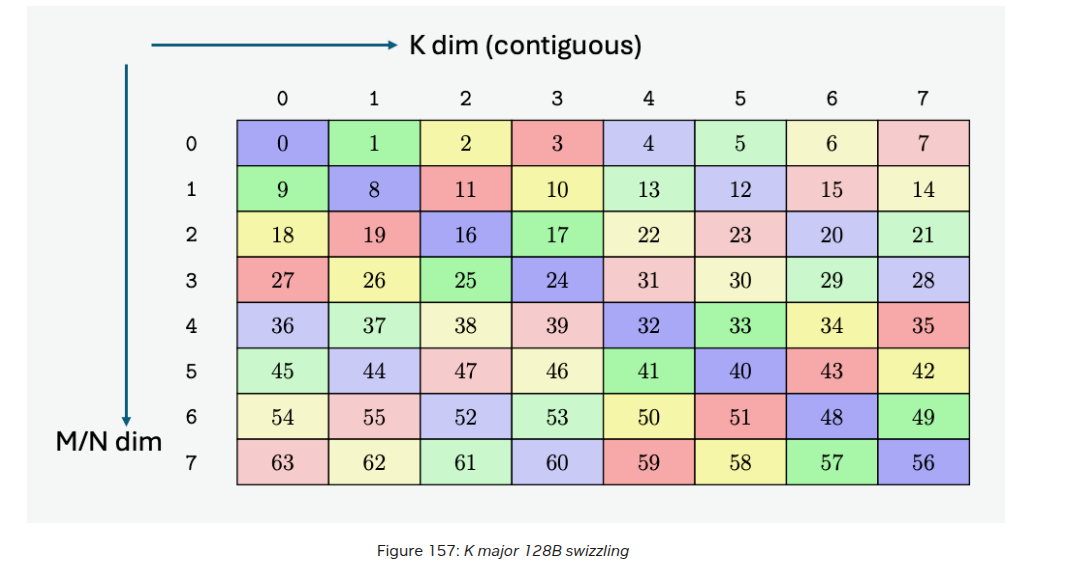

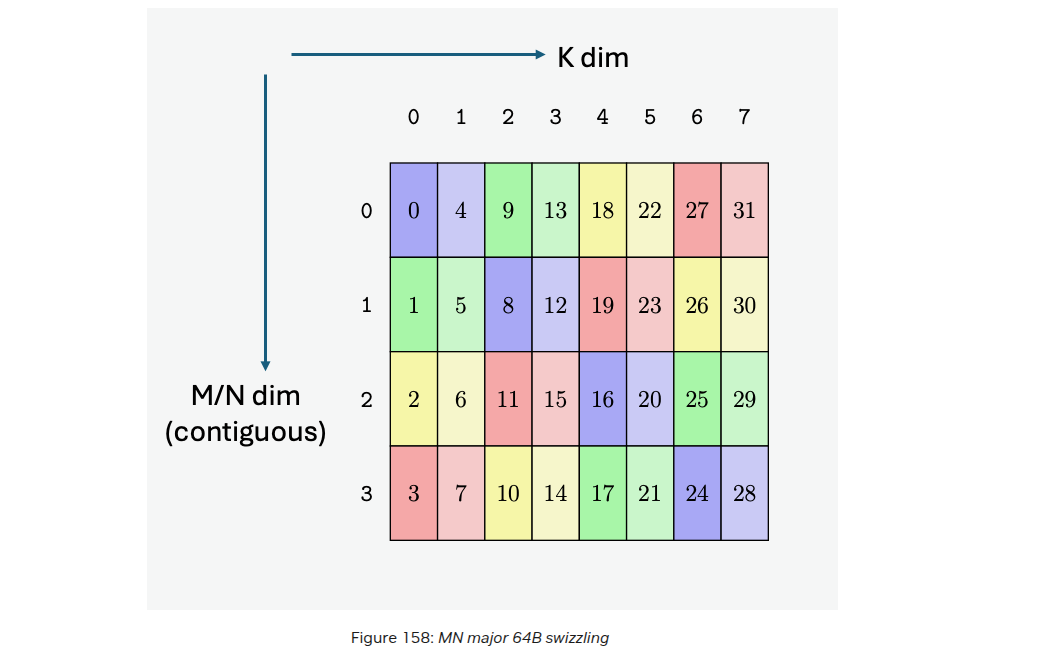

So, as we see above, the general idea is that NVidia provides instruction sets for Tensor Cores to do warp level matmuls that allow us to calculate thread tile matrix multiplications in single instructions. These instructions allow warp-wide MMA operations. Quoted from original Cutlass post:

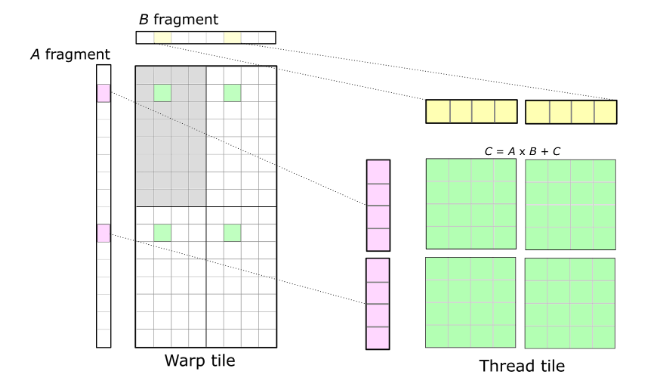

Figure shows the warp tile structure that targets the CUDA WMMA API. Calls to

wmma::load_matrix_syncload fragments of A and B into instances of thenvcuda::wmma::fragment<>template, and the accumulator elements for the warp tile are structured as an array ofnvcuda::wmma::fragment<accumulator>objects. These fragments store a 2D matrix distributed among the threads of the warp. Finally, calls tonvcuda::wmma::mma_sync()for each accumulator fragment (and corresponding fragments from A and B) compute the warp-wide matrix multiply-accumulate operation using Tensor Cores.

Now, using reference naive tensorcore kernel using wmma, the baseline performance was pretty bad. So next, let’s transform our original warp-tiled kernel for 16-bit precision to wmma api. We should be able to map tile sizes directly to wmma api.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

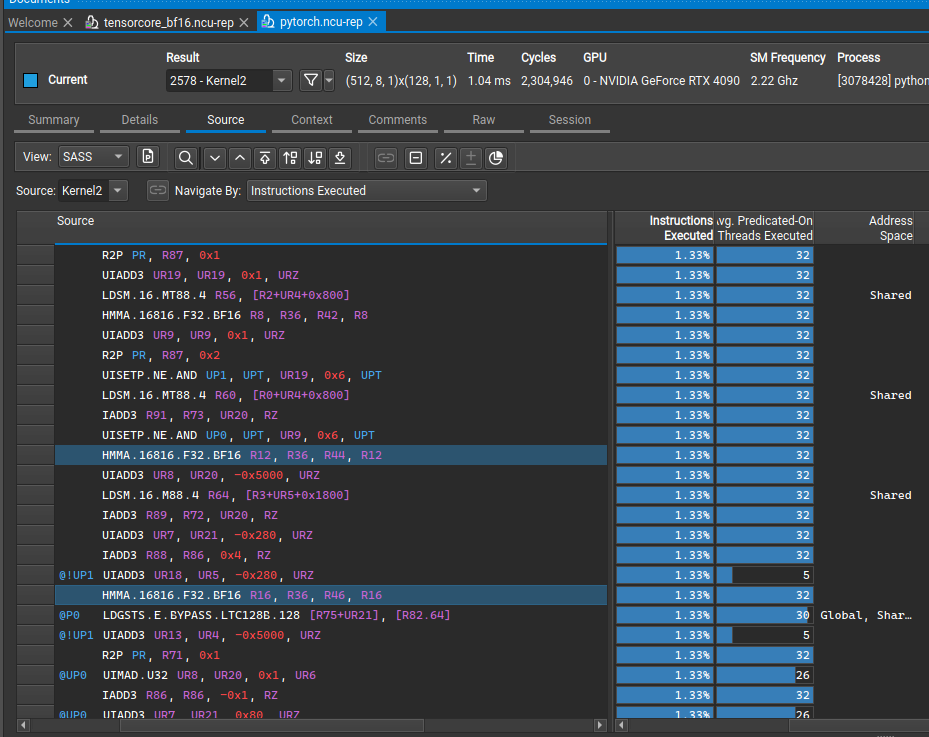

template <typename InputType,

const int BLOCK_ROW_WARPS = 4,

const int BLOCK_COL_WARPS = 4,

const int WARP_ROW_TILES = 4,

const int WARP_COL_TILES = 2,

const int WMMA_M = 16,

const int WMMA_N = 16,

const int WMMA_K = 16>

__global__ void

sgemm_tensorcore_warptiled_kernel(int num_cols_b, int num_cols_a,

float alpha, const InputType *matrix_a,

const InputType *matrix_b, float beta,

float *matrix_c)

{

const uint warp_id = threadIdx.x / 32;

const uint warp_row = warp_id / BLOCK_COL_WARPS;

const uint warp_col = warp_id % BLOCK_COL_WARPS;

constexpr int BLOCK_ROW_TILES = WARP_ROW_TILES * BLOCK_ROW_WARPS;

constexpr int BLOCK_COL_TILES = WARP_COL_TILES * BLOCK_COL_WARPS;

constexpr int BM = BLOCK_ROW_TILES * WMMA_M;

constexpr int BN = BLOCK_COL_TILES * WMMA_N;

constexpr int BK = WMMA_K;

// Shared memory: tile_a (BM x BK, row-major), tile_b (BK x BN, column-major)

__shared__ InputType tile_a[BM * BK];

__shared__ InputType tile_b[BK * BN];

const InputType *global_a = matrix_a;

const InputType *global_b = matrix_b;

float *global_c = matrix_c;

nvcuda::wmma::fragment<nvcuda::wmma::matrix_a, WMMA_M, WMMA_N, WMMA_K, InputType, nvcuda::wmma::row_major> a_frag;

nvcuda::wmma::fragment<nvcuda::wmma::matrix_b, WMMA_M, WMMA_N, WMMA_K, InputType, nvcuda::wmma::col_major> b_frag;

// Accumulator fragments (FP32): each warp maintains WARP_ROW_TILES x WARP_COL_TILES tiles

nvcuda::wmma::fragment<nvcuda::wmma::accumulator, WMMA_M, WMMA_N, WMMA_K, float> acc_frag[WARP_ROW_TILES][WARP_COL_TILES];

nvcuda::wmma::fragment<nvcuda::wmma::accumulator, WMMA_M, WMMA_N, WMMA_K, float> c_frag;

#pragma unroll

for (int i = 0; i < WARP_ROW_TILES; ++i)

{

#pragma unroll

for (int j = 0; j < WARP_COL_TILES; ++j)

{

nvcuda::wmma::fill_fragment(acc_frag[i][j], 0.0f);

}

}

constexpr int NUM_THREADS = BLOCK_ROW_WARPS * BLOCK_COL_WARPS * 32; // warps per block * threads per warp

// K-loop: iterate by BK, load A and B tiles, compute WMMA operations

for (int block_k_idx = 0; block_k_idx < num_cols_a; block_k_idx += BK)

{

// Load A tile (BM x BK, row-major)